JUNE 11, 2024 by Rachel Gordon, Massachusetts Institute of Technology

Collected at : https://techxplore.com/news/2024-06-algorithm-language-videos.html

Mark Hamilton, an MIT Ph.D. student in electrical engineering and computer science and affiliate of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), wants to use machines to understand how animals communicate. To do that, he set out first to create a system that can learn human language “from scratch.”

“Funny enough, the key moment of inspiration came from the movie ‘March of the Penguins.’ There’s a scene where a penguin falls while crossing the ice, and lets out a little belabored groan while getting up. When you watch it, it’s almost obvious that this groan is standing in for a four-letter word. This was the moment where we thought, maybe we need to use audio and video to learn language,” says Hamilton. “Is there a way we could let an algorithm watch TV all day and from this figure out what we’re talking about?”

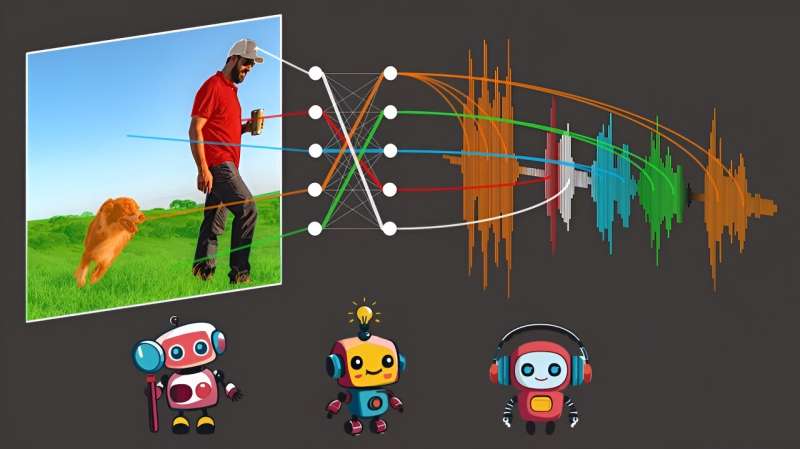

“Our model, DenseAV, aims to learn language by predicting what it’s seeing from what it’s hearing, and vice-versa. For example, if you hear the sound of someone saying ‘bake the cake at 350’ chances are you might be seeing a cake or an oven. To succeed at this audio-video matching game across millions of videos, the model has to learn what people are talking about,” says Hamilton.
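The matching game Hamilton describes can be sketched as a contrastive objective. The Python below is a minimal illustration, not the DenseAV implementation: the function names, the toy feature vectors, and the pooling rule (best-matching image patch per audio frame, averaged over time) are all assumptions made for this example.

```python
import math

def dot(u, v):
    """Inner product of two equal-length feature vectors."""
    return sum(a * b for a, b in zip(u, v))

def clip_similarity(audio_frames, visual_patches):
    """Score one (audio clip, video frame) pair.

    For every audio frame, keep the best-matching visual patch, then
    average over audio frames. This pooling rule is an assumption.
    """
    best = [max(dot(a, p) for p in visual_patches) for a in audio_frames]
    return sum(best) / len(best)

def matching_loss(audio_batch, visual_batch):
    """Cross-entropy over the batch: clip i should score highest with video i."""
    n = len(audio_batch)
    total = 0.0
    for i in range(n):
        scores = [clip_similarity(audio_batch[i], visual_batch[j]) for j in range(n)]
        top = max(scores)
        # Numerically stable log-sum-exp over all candidate videos.
        log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
        total += log_norm - scores[i]
    return total / n

# Toy data (hypothetical): two clips, one 2-dim audio frame each,
# and two videos with two patches each.
audio_batch = [[[1.0, 0.0]], [[0.0, 1.0]]]
visual_batch = [[[2.0, 0.0], [0.0, 0.1]], [[0.0, 2.0], [0.1, 0.0]]]

matched = matching_loss(audio_batch, visual_batch)
shuffled = matching_loss(audio_batch, list(reversed(visual_batch)))
```

In this toy setup the loss is lower when each audio clip is paired with its own video than when the videos are swapped, which is the signal a model would be trained to exploit.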

A paper describing the work appears on the arXiv preprint server.

Once they trained DenseAV on this matching game, Hamilton and his colleagues examined which pixels the model looked for when it heard a sound. For example, when someone says “dog,” the algorithm immediately starts looking for dogs in the video stream. By seeing which pixels are selected by the algorithm, one can discover what the algorithm thinks a word means.
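One way to picture this pixel selection is as a heatmap of similarities between a single audio feature and the feature at every image location. The sketch below assumes that simple dot-product scoring; the helper names and the hand-made feature values are hypothetical and are not drawn from the paper.

```python
def dot(u, v):
    """Inner product of two equal-length feature vectors."""
    return sum(a * b for a, b in zip(u, v))

def similarity_heatmap(audio_vector, visual_grid):
    """Compare one audio feature with the feature at each image location.

    visual_grid is a rows x cols grid of feature vectors. The result is a
    grid of scores of the same shape: higher means a stronger match.
    """
    return [[dot(audio_vector, cell) for cell in row] for row in visual_grid]

def strongest_location(heatmap):
    """Return the (row, col) of the highest-scoring location."""
    best_score, best_pos = None, None
    for r, row in enumerate(heatmap):
        for c, score in enumerate(row):
            if best_score is None or score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos

# Hypothetical 2 x 3 image: the "dog-like" feature sits at row 1, column 2.
visual_grid = [
    [[0.0, 1.0], [0.1, 0.9], [0.0, 1.0]],
    [[0.2, 0.8], [0.1, 0.9], [1.0, 0.0]],
]
spoken_dog = [1.0, 0.0]  # hypothetical audio feature for the word "dog"

heatmap = similarity_heatmap(spoken_dog, visual_grid)
location = strongest_location(heatmap)
```

Here `location` points at the cell whose feature best matches the audio, which is the toy analogue of the pixels lighting up when the word is heard.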

Interestingly, a similar search process happens when DenseAV listens to a dog barking: It searches for a dog in the video stream.

“This piqued our interest. We wanted to see if the algorithm knew the difference between the word ‘dog’ and a dog’s bark,” says Hamilton. The team explored this by giving DenseAV a “two-sided brain.” They found that one side of DenseAV’s brain naturally focused on language, like the word “dog,” and the other side focused on sounds like barking. This showed that DenseAV not only learned the meaning of words and the locations of sounds, but also learned to distinguish between these types of cross-modal connections, all without human intervention or any knowledge of written language.
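The “two-sided brain” can be pictured as splitting each feature vector into two halves and scoring each half separately. The following sketch assumes exactly that split. The feature values are hand-made so that one half responds to the spoken word and the other to the bark, whereas in the work described above this division emerged from training rather than being set by hand.

```python
def split_heads(vector, num_heads=2):
    """Cut a feature vector into equal-sized consecutive chunks, one per head."""
    size = len(vector) // num_heads
    return [vector[h * size:(h + 1) * size] for h in range(num_heads)]

def per_head_scores(audio_vector, visual_vector, num_heads=2):
    """Dot product between audio and visual features, computed head by head."""
    audio_heads = split_heads(audio_vector, num_heads)
    visual_heads = split_heads(visual_vector, num_heads)
    return [
        sum(a * v for a, v in zip(a_head, v_head))
        for a_head, v_head in zip(audio_heads, visual_heads)
    ]

def dominant_head(audio_vector, visual_vector, num_heads=2):
    """Index of the head that contributes the largest score."""
    scores = per_head_scores(audio_vector, visual_vector, num_heads)
    return scores.index(max(scores))

# Hypothetical 4-dim features: dims 0-1 belong to head 0, dims 2-3 to head 1.
dog_pixel = [1.0, 0.5, 0.8, 0.2]   # visual feature at a dog's location
spoken_dog = [0.9, 0.4, 0.0, 0.1]  # audio feature for the word "dog"
dog_bark = [0.1, 0.0, 1.0, 0.3]    # audio feature for a bark

word_head = dominant_head(spoken_dog, dog_pixel)
bark_head = dominant_head(dog_bark, dog_pixel)
```

With these toy values the word and the bark both match the dog pixel, but through different heads, which mirrors the separation the team reports.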

One branch of applications is learning from the massive amount of video published to the internet each day.

“We want systems that can learn from massive amounts of video content, such as instructional videos,” says Hamilton. “Another exciting application is understanding new languages, like dolphin or whale communication, which don’t have a written form of communication. Our hope is that DenseAV can help us understand these languages that have evaded human translation efforts since the beginning. Finally, we hope that this method can be used to discover patterns between other pairs of signals, like the seismic sounds the earth makes and its geology.”

A formidable challenge lay ahead of the team: learning language without any text input. Their objective was to rediscover the meaning of language from a blank slate, without using pre-trained language models. This approach is inspired by how children learn language by observing and listening to their environment.

More information: Mark Hamilton et al, Separating the “Chirp” from the “Chat”: Self-supervised Visual Grounding of Sound and Language, arXiv (2024). arxiv.org/abs/2406.05629

Journal information: arXiv
