By Chris Hoffman May 15, 2024

Collected at: https://www.computerworld.com/article/2106043/private-ai-chatbots-windows-pc.html

ChatGPT, Microsoft Copilot, and Google Gemini all run on servers in distant data centers, even as the PC industry works on moving generative AI (genAI) chatbots onto your PC. But you don’t have to wait for that to happen — in a few clicks, you can already install and run large language models (LLMs) on your own Windows PC.

These open-source AI chatbots offer big benefits: You can use them without an internet connection, and your conversations and data stay on your PC instead of being sent to a server somewhere. You can also choose from a wide variety of genAI models built for different purposes.

It’s clear why this is the future of AI in business and everywhere else: Everyone will be able to run chatbots that fit their needs on their own hardware, ensuring sensitive data isn’t sent off to another company’s data center.

Microsoft is likely on the cusp of announcing the ability to run parts of Copilot locally on your computer — that’s the point of the neural processing units in new PCs and the big “AI PC” push in general. But you can get an open-source AI chatbot on your PC this minute, if you know where to look.

How to run private AI chatbots with Ollama

Ollama is an open-source project that makes it easy to install and run genAI models (those chatbots, in other words) on Windows PCs. No configuration is required: Ollama automatically uses available hardware, such as a powerful Nvidia graphics card, to accelerate the AI models. Response speed depends on the hardware in your computer, but Ollama works on a wide range of PCs.

The tool is named after Meta’s Llama LLM, but you can use it to download and run a wide variety of other models, including those from Google and Microsoft. All you need to do is download the installer, click the “Install” button, and type three words to pull up your chatbot of choice. It’s a text-based interface, but chatbots are text-based already.

To install Ollama, head to the Ollama website and download the installer for Windows; it works on both Windows 10 and 11. (Ollama also runs on macOS and Linux.) Run the setup file and click “Install.” It’s a simple, one-click process.

Once that’s done, you can click the Ollama notification that appears or simply open a command-line window. Terminal, Command Prompt, and PowerShell all work.

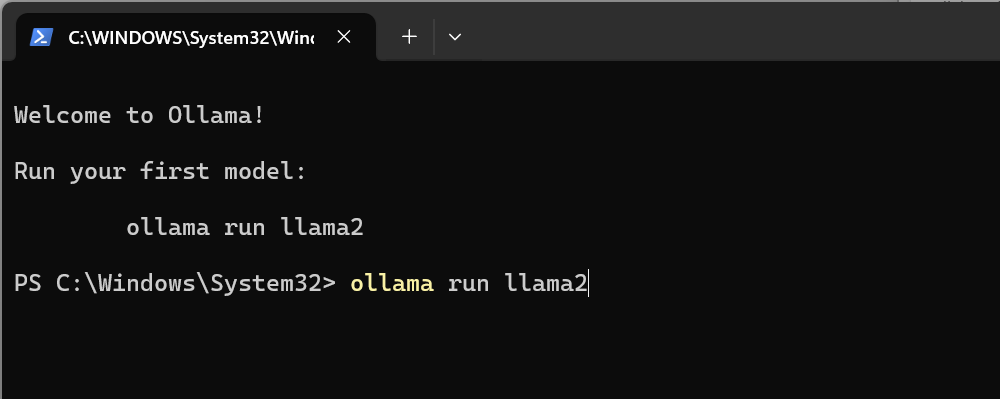

To open one, right-click your Start button or press Windows+X, then click the “Terminal,” “Command Prompt,” or “Windows PowerShell” option in the menu. To run Meta’s Llama 2 model, from the Llama family Ollama is named after, type the following command and press Enter:

ollama run llama2

That’s it! Ollama will do all the work, downloading the AI model and the files it requires. You’ll see a progress bar at the command line while it finishes the download.

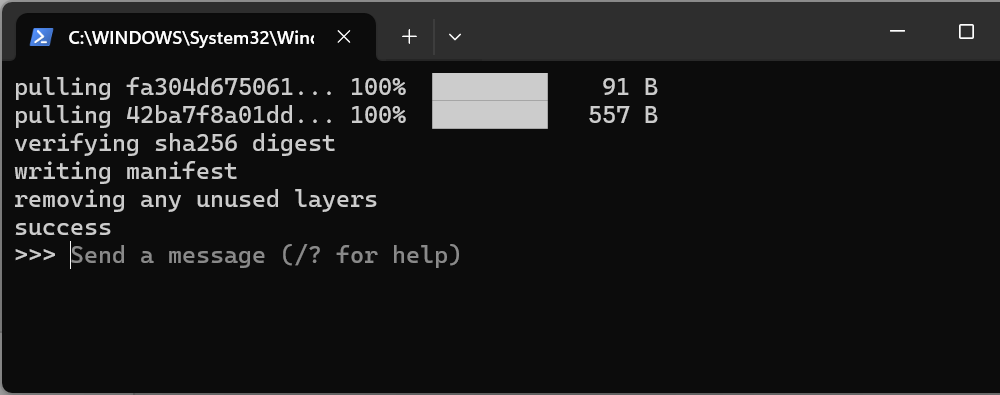

It might take a few minutes the first time, because Ollama has to download about 3.8 GB of data; how long depends on the speed of your connection. When it’s ready, you’ll see the following line:
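For a rough sense of how much the connection speed matters, download time is simply size divided by speed. The sketch below is a back-of-the-envelope estimate only: it assumes decimal units (1 GB = 10^9 bytes, 1 Mbps = 10^6 bits per second) and a steady transfer at the full advertised rate with no overhead, so real downloads will usually take somewhat longer. The function name is hypothetical.

```python
def estimate_download_minutes(size_gb: float, speed_mbps: float) -> float:
    """Rough download time in minutes for size_gb gigabytes at speed_mbps.

    Assumes decimal units and a constant transfer rate with no
    protocol overhead, so it is a best-case estimate.
    """
    if speed_mbps <= 0:
        raise ValueError("speed_mbps must be positive")
    bits = size_gb * 1e9 * 8              # gigabytes -> bits
    seconds = bits / (speed_mbps * 1e6)   # bits / (bits per second)
    return seconds / 60


if __name__ == "__main__":
    # The llama2 download mentioned above is about 3.8 GB.
    for speed in (25, 100, 500):
        minutes = estimate_download_minutes(3.8, speed)
        print(f"{speed:>4} Mbps: about {minutes:.1f} minutes")
```

At 100 Mbps, for example, 3.8 GB is 30.4 gigabits, which works out to about 304 seconds, or roughly five minutes.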

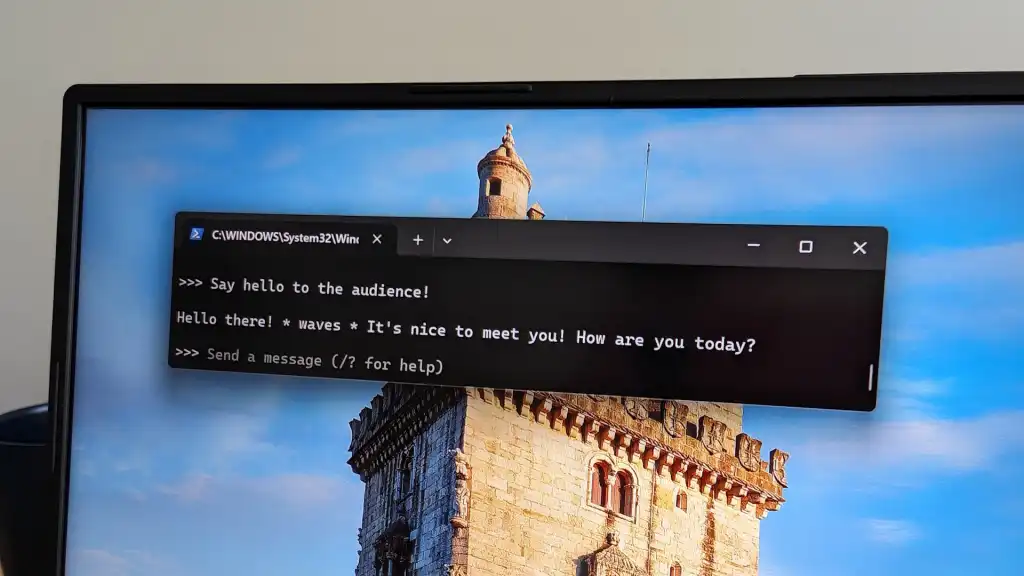

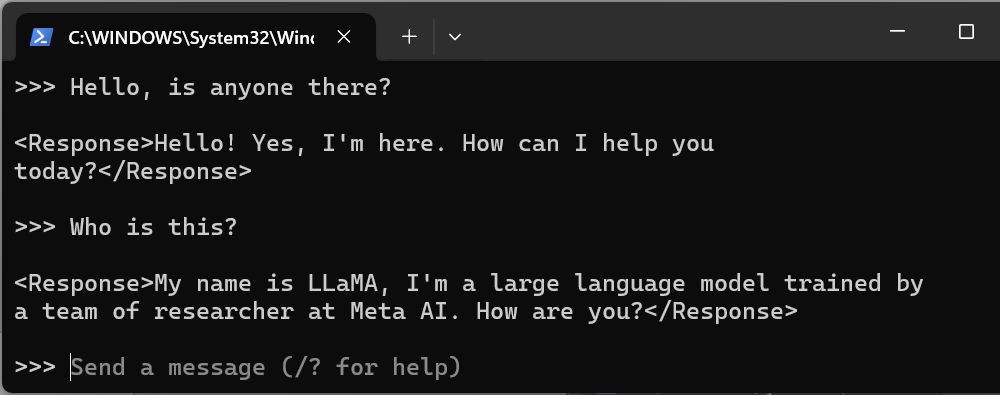

You can then type a message and press Enter. You’ll get a response from the AI chatbot you downloaded — the llama2 model, in this case.

Remember, once the model is downloaded, this all runs entirely on your computer. You can use it offline if you like: you’re not depending on a big company’s servers, and your conversations stay private on your device.
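Because everything runs locally, other programs on your PC can talk to the model too. Ollama runs a small local server, by default at http://localhost:11434, and the sketch below sends a prompt to its /api/generate endpoint using only Python’s standard library. It assumes Ollama is running and the llama2 model has already been downloaded; the helper names build_request and ask are hypothetical, and the request fields follow Ollama’s REST API as documented at the time of writing, so treat this as a sketch rather than a reference client.

```python
import json
import urllib.request

# Ollama's local server listens on port 11434 by default (an assumption
# that holds unless you have changed Ollama's configuration).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint.

    "stream": False asks for one complete JSON reply instead of a
    series of partial chunks.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send a prompt to the local model and return its text reply.

    Requires the Ollama server to be running on this machine.
    """
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        body = json.load(resp)
    # The generated text is returned in the "response" field.
    return body["response"]
```

With Ollama running, ask("llama2", "Why is the sky blue?") returns the reply as a string. The request goes to localhost, so the prompt never leaves your machine.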

Want to paste in text from an email or document? Just right-click in the terminal. To copy text out of the terminal, select it with the mouse and right-click. Simple!

You can type /bye and press Enter to close the session with your chatbot.

When you want to use it again, run the same command:

ollama run llama2

This time, since the chatbot files have already been downloaded, you’ll be able to use it instantly.

The Ollama website has a long list of AI models you can install and try, including Meta’s new Llama 3, Microsoft’s Phi-3 Mini, Google’s Gemma, and Mistral AI’s Mistral. Just use the same command and specify the model you want. For example:

- ollama run llama3

- ollama run phi3

- ollama run gemma

- ollama run mistral
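If you want to try several of these models side by side, the same command is easy to script. The sketch below assumes that adding a prompt after the model name (ollama run MODEL "PROMPT") makes Ollama answer once and exit instead of opening an interactive chat; the function names build_command and compare_models are hypothetical. Each model is downloaded on first use, exactly as when you type the command yourself, so the first run can take a while and use several gigabytes of disk space.

```python
import shutil
import subprocess
from typing import Dict, List, Optional, Tuple

# The model names used in the "ollama run" commands above.
MODELS = ("llama3", "phi3", "gemma", "mistral")


def build_command(model: str, prompt: Optional[str] = None) -> List[str]:
    """Return the argument list for `ollama run <model> [prompt]`.

    Without a prompt this is the interactive chat described above; with
    a prompt, Ollama is assumed to answer once and exit.
    """
    cmd = ["ollama", "run", model]
    if prompt is not None:
        cmd.append(prompt)
    return cmd


def compare_models(prompt: str, models: Tuple[str, ...] = MODELS) -> Dict[str, str]:
    """Ask each model the same question and collect the text answers."""
    if shutil.which("ollama") is None:
        raise FileNotFoundError("the 'ollama' command was not found on PATH")
    answers: Dict[str, str] = {}
    for model in models:
        # check=True raises CalledProcessError if Ollama exits with an error.
        result = subprocess.run(
            build_command(model, prompt),
            capture_output=True,
            text=True,
            check=True,
        )
        answers[model] = result.stdout.strip()
    return answers
```

For example, compare_models("Explain what a large language model is in two sentences.") returns a dictionary mapping each model name to its answer, which makes it easy to see how the models differ on the same question.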

In the future, we’ll probably see nicer graphical interfaces built on top of tools like Ollama. There’s already a great project, Open WebUI, with an interface very similar to ChatGPT’s, but it requires Docker to install, so it isn’t quite as “click and run” as Ollama.

Want something faster, or just want to skip the installation process? HuggingChat is a free web interface that gives you access to many of these chatbots in your browser. (With HuggingChat, though, the models run on HuggingChat’s servers, not on your own PC.)

Other tools for running private AI chatbots on your PC

Ollama is just one of many tools for accessing open-source chatbots. Here are a few other options worth a shot:

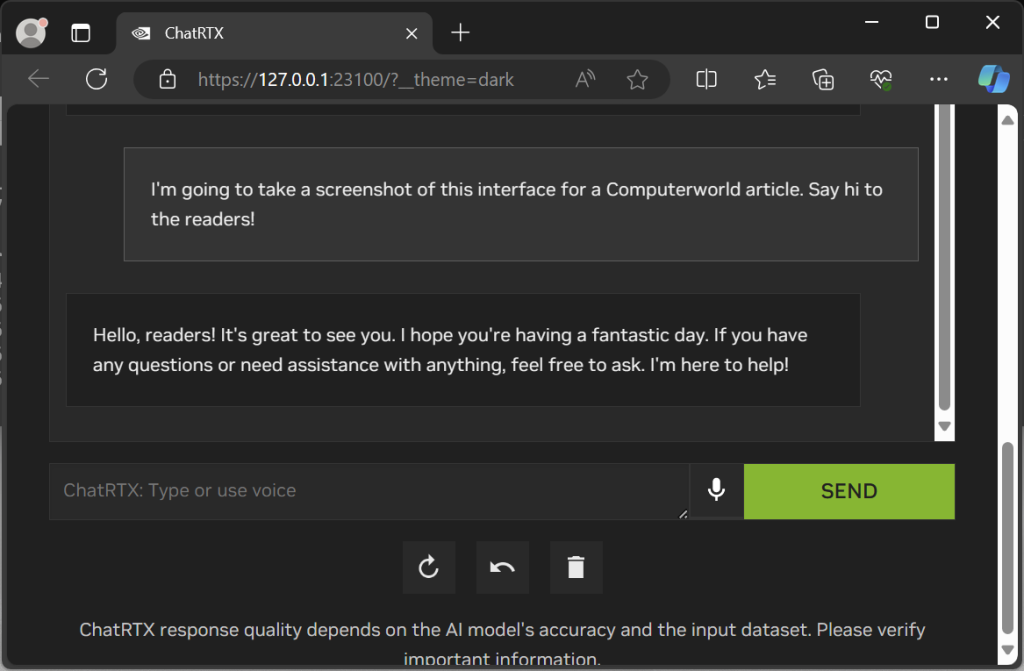

Nvidia’s ChatRTX requires a PC with recent Nvidia graphics hardware: specifically, an RTX 30- or 40-series GPU with at least 8GB of VRAM. If you meet that requirement, you get a convenient web-based interface for models like Mistral and Llama 2, optimized to run on Nvidia’s hardware. You can also give ChatRTX your notes or documents and “chat with your files,” asking questions about their contents. (Nvidia recently added support for additional AI models, like Gemma, to ChatRTX.)
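The hardware requirement is simple enough to express as a check. The sketch below encodes the rule as stated above (an RTX 30- or 40-series GPU with at least 8GB of VRAM). It relies on a hypothetical, simplified reading of the model number from the GPU name, so treat it as an illustration rather than Nvidia’s own compatibility test.

```python
import re


def meets_chatrtx_requirements(gpu_name: str, vram_gb: float) -> bool:
    """Rough check: RTX 30- or 40-series GPU with at least 8 GB of VRAM.

    Parses a four-digit model number from names like
    "NVIDIA GeForce RTX 4070". This name-based parsing is a
    simplification and ignores workstation cards with other naming.
    """
    match = re.search(r"RTX\s*(\d{4})", gpu_name, re.IGNORECASE)
    if match is None:
        return False
    series = int(match.group(1)) // 1000 * 10  # 4070 -> 40, 3060 -> 30
    return series in (30, 40) and vram_gb >= 8
```

On a PC with Nvidia drivers installed, nvidia-smi --query-gpu=name,memory.total --format=csv should print the GPU name and its total memory in MiB, which you can convert to GB and pass in.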

You can also try GPT4All, which offers a nice little graphical interface. PCWorld recommended it in early February, a few weeks before Ollama launched on Windows. It’s a capable tool, though it has fewer AI models available than Ollama. Like Ollama, it runs on a wide range of PC hardware; unlike Nvidia’s ChatRTX, it doesn’t need recent high-end hardware.
