December 19, 2024 by Ingrid Fadelli, Tech Xplore

Collected at: https://techxplore.com/news/2024-12-ai-powered-algorithm-enables-personalized.html

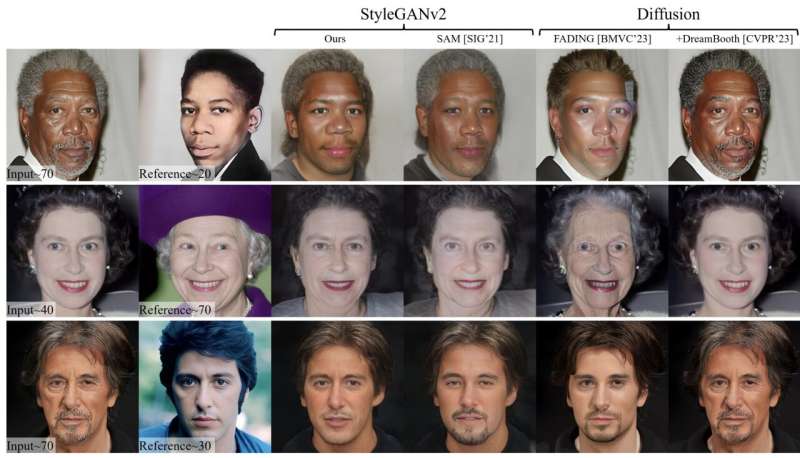

Researchers at the University of North Carolina at Chapel Hill and the University of Maryland recently developed MyTimeMachine (MyTM), a new AI-powered method for personalized age transformation. It can make human faces in images or videos appear younger or older while accounting for person-specific factors that influence how an individual ages.

This algorithm, introduced in a paper posted to the arXiv preprint server, could be used to broaden or enhance the features of consumer-facing picture-editing platforms, and could also be a valuable tool for the film, TV and entertainment industries.

“Virtual aging techniques are widely used in visual effects (VFX) in movies, but they require good prosthetics and makeup, often tiresome and inconvenient for actors to wear regularly during shooting,” Roni Sengupta, the researcher who supervised the study, told Tech Xplore.

“The inspiration for this work came from the 2019 film ‘The Irishman,’ which used groundbreaking VFX to digitally re-age actors. While visually impressive, the process required significant manual effort, high costs, and substantial computational resources, limiting its broader use.”

Social media filters that make users look older or younger already exist, but the effects they produce are often simplistic, almost cartoon-like. For instance, they may simply blur a face to make it look younger or add wrinkles to make it look older.

“Real aging transforms facial shape along with texture and is often highly dependent on various factors such as ethnicity, gender, genetics, lifestyle and health conditions,” explained Sengupta. “This motivated us to ask: Could age transformations be made more accessible, fully automatic, and highly realistic? To address this, we developed a neural network-based method that simulates a person’s entire lifespan—from youth to old age—using only a limited set of selfies.”

Video: https://www.youtube.com/embed/hENjfX8A7V0?color=white. Credit: Luchao Qi et al.

MyTM, the new age transformation model created by the researchers, is based on a generative artificial neural network. This algorithm was trained to simulate the entire aging trajectory of specific people, producing realistic images at different life stages.

“Using about 50 input images, say spanning the past 20 years, MyTM leverages generative modeling to predict realistic aging progressions from 0 to 100 years old,” said Luchao Qi, the graduate student leading the project. “Unlike traditional methods that rely on extensive datasets for plausible results, our approach delivers accurate, realistic, and personalized aging changes like skin texture and facial structure by leveraging a few images of that person only.”

In initial tests, the model developed by Qi, Sengupta and their colleagues produced highly realistic, personalized simulations of specific people's faces at different stages of their lives. Notably, MyTM also accounts for individual factors that can influence aging, such as ethnicity, lifestyle choices and genetics.

“The most notable achievement of MyTM is its ability to produce consistent, lifelike aging simulations from ~50 selfies,” said Qi. “One practical application of the model could be in film and VFX, as it could be used to streamline aging and de-aging in movies, reducing costs and time while ensuring high-quality results.

“Currently, most low/medium-budget films have poor re-aging VFX, and high-budget films require heavy prosthetics or manual post-processing. Our method can make re-aging effects accessible and high-quality for all kinds of content creators and movie makers.”

MyTM could also enable the creation of more realistic personalized content, for instance by improving filters for social media, and producing better simulations for marketing or health awareness campaigns. Finally, the model could be used to create new platforms designed to offer emotional support for people who are grieving the loss of a loved one.

“We found that our past attempts at age transformation received several requests from grieving mothers and family members,” said Sengupta. “In our next studies, we plan to further reduce MyTM’s input requirements. Currently, the model requires around 50 images, but we plan to refine the system to perform effectively with just a handful of images, while maintaining their quality.”

The researchers also plan to improve the efficiency and speed of their system, which would facilitate its use in dynamic settings such as aging or de-aging live virtual avatars and faces in interactive media. This could be achieved by using more advanced generative algorithms, such as diffusion models.

More information: Luchao Qi et al, MyTimeMachine: Personalized Facial Age Transformation, arXiv (2024). DOI: 10.48550/arxiv.2411.14521

Journal information: arXiv
