December 3, 2024 by Ken Kingery, Duke University

Collected at: https://techxplore.com/news/2024-12-platform-ai-constant-nuanced-human.html

During your first driving class, the instructor probably sat next to you, offering immediate advice on every turn, stop and minor adjustment. If it was a parent, they might have even grabbed the wheel a few times and shouted “Brake!” Over time, those corrections and insights developed experience and intuition, turning you into an independent, capable driver.

Although advancements in artificial intelligence (AI) have made self-driving cars a reality, the teaching methods used to train them remain a far cry from even the most nervous side-seat driver. Rather than nuance and real-time instruction, AI learns primarily through massive datasets and extensive simulations, regardless of the application.

Now, researchers from Duke University and the Army Research Laboratory have developed a platform to help AI learn to perform complex tasks more like humans do. Called GUIDE, the AI framework will be showcased at the upcoming Conference on Neural Information Processing Systems (NeurIPS 2024), taking place Dec. 9–15 in Vancouver, Canada. The work is also available on the arXiv preprint server.

“It remains a challenge for AI to handle tasks that require fast decision making based on limited learning information,” explained Boyuan Chen, professor of mechanical engineering and materials science, electrical and computer engineering, and computer science at Duke, where he also directs the Duke General Robotics Lab.

“Existing training methods are often constrained by their reliance on extensive pre-existing datasets while also struggling with the limited adaptability of traditional feedback approaches,” Chen said. “We aimed to bridge this gap by incorporating real-time continuous human feedback.”

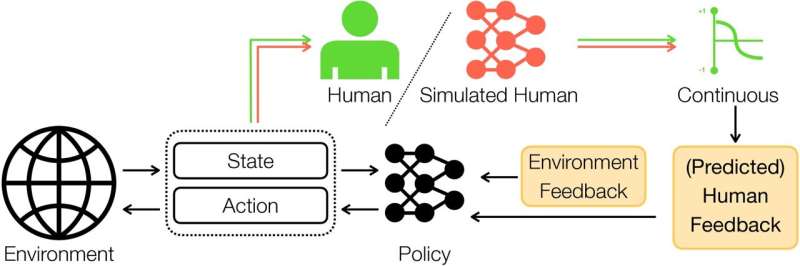

GUIDE works by letting humans observe an AI’s actions in real time and provide ongoing, nuanced feedback. A skilled driving coach, similarly, wouldn’t just shout “left” or “right,” but would offer detailed guidance that fosters incremental improvements and deeper understanding.

In its debut study, GUIDE helps AI learn how best to play hide-and-seek. The game involves two beetle-shaped players, one red and one green. While both are controlled by computers, only the red player is working to advance its AI controller.

The game takes place on a square playing field with a C-shaped barrier in the center. Most of the playing field remains black and unknown until the red seeker enters new areas to reveal what they contain.

As the red AI player chases the other, a human trainer provides feedback on its searching strategy. While previous attempts at this sort of training strategy have only allowed for three human inputs—good, bad or neutral—GUIDE has humans hover a mouse cursor over a gradient scale to provide real-time feedback.
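To make the contrast concrete, here is a minimal Python sketch of the two feedback styles. It is an illustration only, not the GUIDE implementation: the function names, the vertical layout of the scale, the [-1, 1] output range and the `dead_zone` threshold are all assumptions made for this example.

```python
def cursor_to_feedback(cursor_y: float, scale_top: float, scale_bottom: float) -> float:
    """Map a cursor position on a vertical gradient bar to a value in [-1, 1].

    Assumption for this sketch: screen y grows downward, so the top of the
    bar (smallest y) means the most positive feedback.
    """
    span = scale_bottom - scale_top
    if span <= 0:
        raise ValueError("scale_bottom must be greater than scale_top")
    # Clamp the cursor to the bar so stray mouse movement stays in range.
    clamped = min(max(cursor_y, scale_top), scale_bottom)
    # 0.0 at the bottom of the bar, 1.0 at the top.
    fraction = (scale_bottom - clamped) / span
    return 2.0 * fraction - 1.0


def discretize(feedback: float, dead_zone: float = 0.33) -> int:
    """Collapse a continuous value into the older good/neutral/bad scheme."""
    if feedback > dead_zone:
        return 1
    if feedback < -dead_zone:
        return -1
    return 0


# Two different cursor positions give two different continuous signals...
mild = cursor_to_feedback(25.0, 0.0, 100.0)
strong = cursor_to_feedback(5.0, 0.0, 100.0)
# ...but both collapse to the same "good" label under three-way feedback.
same_label = discretize(mild) == discretize(strong)
```

In this sketch the continuous signal preserves how strongly the trainer approves, which is exactly the information a three-button scheme throws away.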

The experiment involved 50 adult participants with no prior training or specialized knowledge, which is by far the largest-scale study of its kind. The researchers found that just 10 minutes of human feedback led to a significant improvement in the AI’s performance. GUIDE achieved up to a 30% increase in success rates compared to current state-of-the-art human-guided reinforcement learning methods.

“This strong quantitative and qualitative evidence highlights the effectiveness of our approach,” said Lingyu Zhang, the lead author and a first-year Ph.D. student in Chen’s lab. “It shows how GUIDE can boost adaptability, helping AI to independently navigate and respond to complex, dynamic environments.”

The researchers also demonstrated that human trainers are only really needed for a short period of time. As participants provided feedback, the team created a simulated human trainer AI based on their insights within particular scenarios at particular points in time. This allows the seeker AI to continually train long after a human has grown weary of helping it learn. Training an AI “coach” that isn’t as good as the AI it’s coaching may sound counterintuitive, but as Chen explains, it’s actually a very human thing to do.

“While it’s very difficult for someone to master a certain task, it’s not that hard for someone to judge whether or not they’re getting better at it,” Chen said. “Lots of coaches can guide players to championships without having been a champion themselves.”
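The idea of a simulated trainer can be sketched in a few lines of Python. The article does not describe the model the team used, so this example swaps in a simple nearest-neighbour average purely for illustration; the `SimulatedTrainer` class, its method names and the two-number state vectors are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


class SimulatedTrainer:
    """Stand-in for a human trainer, built from recorded feedback.

    Assumption for this sketch: a game situation is summarized as a short
    vector of numbers, and similar situations deserve similar feedback.
    """

    def __init__(self, k: int = 3) -> None:
        if k < 1:
            raise ValueError("k must be at least 1")
        self.k = k
        self.examples: List[Tuple[Tuple[float, ...], float]] = []

    def record(self, state: Sequence[float], feedback: float) -> None:
        """Store one (situation, human feedback) pair."""
        self.examples.append((tuple(state), feedback))

    def predict(self, state: Sequence[float]) -> float:
        """Estimate the feedback a human would give in this situation."""
        if not self.examples:
            return 0.0  # no data yet: stay neutral
        point = tuple(state)
        # Rank recorded situations by Euclidean distance to the new one.
        ranked = sorted(self.examples, key=lambda ex: math.dist(ex[0], point))
        nearest = ranked[: self.k]
        return sum(fb for _, fb in nearest) / len(nearest)


# Record a short session of human feedback, then let the model take over.
trainer = SimulatedTrainer(k=1)
trainer.record((0.0, 0.0), -0.5)  # e.g. seeker wandering far from the hider
trainer.record((1.0, 1.0), 0.8)   # e.g. seeker closing in
estimate = trainer.predict((0.9, 0.9))
```

Once enough pairs are recorded, `predict` can keep supplying a feedback signal to the learning agent after the human has stopped, which is the role the article describes for the simulated trainer.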

Another fascinating direction for GUIDE lies in exploring the individual differences among human trainers. Cognitive tests given to all 50 participants revealed that certain abilities, such as spatial reasoning and rapid decision-making, significantly influenced how effectively a person could guide an AI. These results highlight intriguing possibilities such as enhancing these abilities through targeted training and discovering other factors that might contribute to successful AI guidance.

These questions point to an exciting potential for developing more adaptive training frameworks that not only focus on teaching AI but also on augmenting human capabilities to form future human-AI teams. By addressing these questions, researchers hope to create a future where AI learns not only more effectively but also more intuitively, bridging the gap between human intuition and machine learning, and enabling AI to operate more autonomously in environments with limited information.

“As AI technologies become more prevalent, it’s crucial to design systems that are intuitive and accessible for everyday users,” said Chen. “GUIDE paves the way for smarter, more responsive AI capable of functioning autonomously in dynamic and unpredictable environments.”

The team envisions future research that incorporates diverse communication signals, including language, facial expressions and hand gestures, to create a more comprehensive and intuitive framework for AI to learn from human interactions. Their work is part of the lab’s mission to build next-level intelligent systems that team up with humans to tackle tasks that neither AI nor humans alone could solve.

More information: Lingyu Zhang et al, GUIDE: Real-Time Human-Shaped Agents, arXiv (2024). DOI: 10.48550/arxiv.2410.15181

Journal information: arXiv
