November 25, 2024 by Bob Yirka, Phys.org

Collected at: https://phys.org/news/2024-11-google-deepmind-ai-based-decoder.html

A team of AI researchers at Google DeepMind, working with a team of quantum researchers at Google Quantum AI, announced the development of an AI-based decoder that identifies quantum computing errors.

In their paper published in the journal Nature, the group describes how they used machine learning to help find qubit errors more efficiently than other methods. Nadia Haider, with Delft University of Technology’s Quantum Computing Division of QuTech and the Department of Microelectronics, has published a News and Views piece in the same journal issue outlining the work done by the team at Google.

One of the main sticking points preventing the development of a truly useful quantum computer is the issue of error correction. Qubits tend to be fragile, so their states degrade easily, resulting in errors. In this new effort, the combined team of researchers at Google has taken a new approach to solving the problem: they have developed an AI-based decoder to help identify such errors.

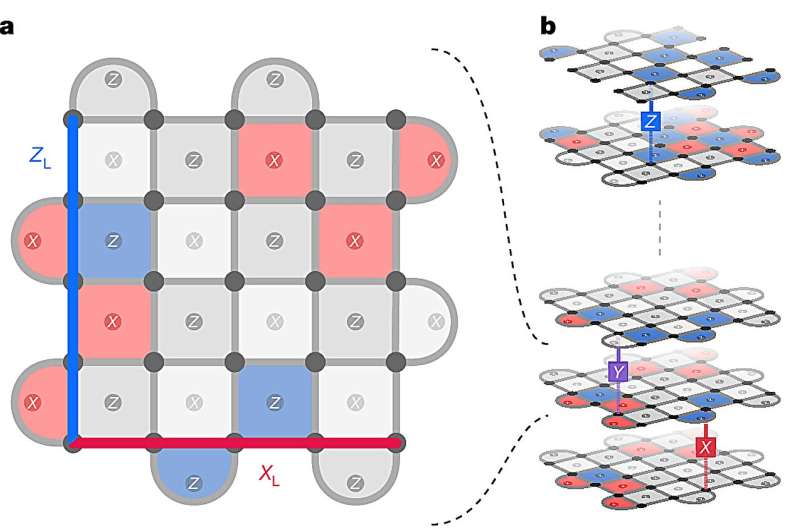

Over the past several years, Google’s Artificial Intelligence division has developed and worked on a quantum computer called Sycamore. To conduct quantum computing, it creates single logical qubits using multiple hardware qubits, which are used to run programs while also carrying out error correction. In this new effort, the team has developed a new way to find and correct such errors and has named it AlphaQubit.
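The idea of building one logical qubit from several hardware qubits can be illustrated with a classical analogy. The sketch below is not Sycamore's scheme or anything from the paper; it assumes a simple three-bit repetition code in which one logical bit is copied into several noisy physical bits and recovered by majority vote. The function names and the 5% error rate are hypothetical choices made for illustration.

```python
import random

def encode(bit, n=3):
    # Copy one logical bit into n physical bits (classical repetition code).
    return [bit] * n

def add_noise(bits, p, rng):
    # Flip each physical bit independently with probability p.
    return [b ^ 1 if rng.random() < p else b for b in bits]

def decode(bits):
    # Majority vote: the logical value is whichever bit value is more common.
    return 1 if sum(bits) > len(bits) // 2 else 0

def logical_error_rate(p, n=3, trials=20000, seed=0):
    # Estimate how often the decoded logical bit differs from the original.
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        bit = rng.randint(0, 1)
        if decode(add_noise(encode(bit, n), p, rng)) != bit:
            failures += 1
    return failures / trials

if __name__ == "__main__":
    p = 0.05  # assumed physical error rate, for illustration only
    print("physical error rate:", p)
    print("logical error rate: ", logical_error_rate(p))
```

In this toy model, the logical bit fails only when two or more of the three physical bits flip, so the logical error rate comes out well below the physical one. Real quantum error correction is more involved, since the data qubits cannot simply be read out and copied, but the redundancy principle is similar.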

The new AI-based decoder is a type of deep learning neural network. The researchers first trained it to recognize errors using two sources: their Sycamore computer, running with 49 qubits, and a quantum simulator. Together, the two systems generated hundreds of millions of examples of quantum errors. They then reran Sycamore, this time using AlphaQubit to identify any generated errors, all of which were then corrected.
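The notion of training a decoder on generated examples of errors can be sketched in a few lines. The example below is a drastically simplified stand-in, not AlphaQubit's neural network: it assumes a three-bit repetition code, simulates random bit flips, and "learns" a lookup table that maps each parity-check pattern (the syndrome) to the error most often seen with it. All names, the 10% error rate, and the sample count are hypothetical.

```python
import random
from collections import Counter, defaultdict

def syndrome(bits):
    # Parity checks between neighboring bits; a nonzero entry signals a mismatch.
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

def sample_error(p, rng):
    # Simulated error pattern: each of the three bits flips with probability p.
    return tuple(1 if rng.random() < p else 0 for _ in range(3))

def train_decoder(p=0.1, examples=5000, seed=1):
    # Count which error pattern accompanies each syndrome in the simulated
    # data, then keep the most frequent one as the decoder's guess.
    rng = random.Random(seed)
    counts = defaultdict(Counter)
    for _ in range(examples):
        err = sample_error(p, rng)
        counts[syndrome(err)][err] += 1
    return {s: c.most_common(1)[0][0] for s, c in counts.items()}

def correct(bits, table):
    # Undo the error the table considers most likely for this syndrome.
    guess = table[syndrome(bits)]
    return [b ^ e for b, e in zip(bits, guess)]

if __name__ == "__main__":
    table = train_decoder()
    print(correct([1, 0, 1], table))
```

The decoder here never sees the encoded value itself, only the parity checks, which loosely mirrors how quantum decoders work from syndrome measurements rather than from the data qubits directly. A neural network decoder replaces the lookup table with a model that can handle far larger codes and noisier, more correlated errors.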

They found that it delivered a 6% improvement in error correction during highly accurate but slow tests and a 30% improvement during less accurate but faster tests. They also tested it using up to 241 qubits and found that it exceeded expectations. They suggest their findings indicate that machine learning may be the solution to error correction on quantum computers, allowing researchers to concentrate on other problems yet to be overcome.

More information: Johannes Bausch et al, Learning high-accuracy error decoding for quantum processors, Nature (2024). DOI: 10.1038/s41586-024-08148-8

Nadia Haider, Quantum computing: physics–AI collaboration quashes quantum errors, Nature (2024). DOI: 10.1038/d41586-024-03557-1

blog.google/technology/google- … um-error-correction/

Journal information: Nature
