November 12, 2024 by eLife

Collected at: https://phys.org/news/2024-11-method-effect-relationships-ebb.html

A new computational method can identify how cause-and-effect relationships ebb and flow over time in dynamic real-life systems such as the brain.

The method was reported today in a Reviewed Preprint published in eLife. The editors describe the work as important, presenting a novel approach for inferring causal relations in non-stationary time series data. They add that the authors provide solid evidence for their claims through thorough numerical validation and comprehensive exploration of the method on both synthetic and real-world datasets, providing a timely and important contribution with diverse real-life applications.

Naturally occurring real-life systems, such as the economy, the climate and systems in the body, rarely follow well-behaved patterns, interact linearly, or stay consistent in strength. Instead, links between cause and effect (called causal interactions or causality) are transient: they frequently appear, disappear, reappear and change in strength over time. This poses a challenge for scientists using computer models to predict and understand the dynamics of complex systems.

“Currently available methods for studying complex systems tend to assume that the system is approximately stationary, that is, the system’s dynamical properties stay the same over time. Other commonly introduced simplifications such as linearity and time invariance can produce incorrect expectations,” explains lead author Josuan Calderon, who recently received his Ph.D. in the Department of Physics, Emory University, Atlanta, US. “Such systems can predict causal relationships, but often fail to quantify changes in the strength or direction of these relationships.”

To address this gap, the research team developed a novel machine-learning model called Temporal Autoencoders for Causal Inference (TACI) to identify and measure the direction and strength of causal interactions that vary over time. They adopted a two-pronged approach to ensure the model can accurately assess causality between two variables—for example, temperature and humidity—that behave in a non-linear way and fluctuate over time.

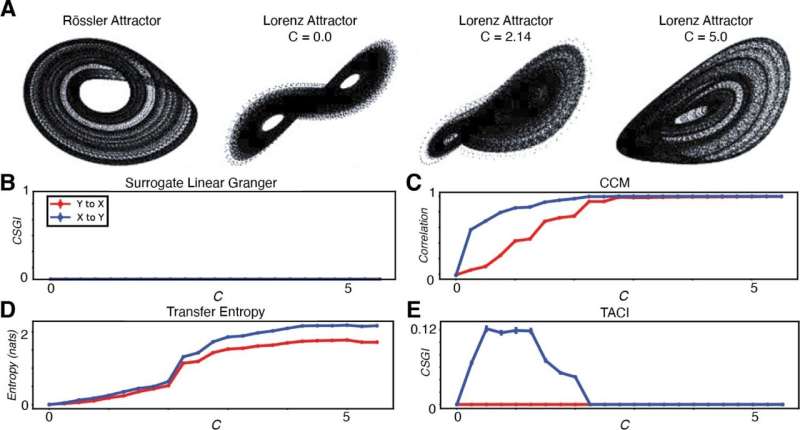

They first tested their model on simulated data and compared it to a range of existing models for assessing causality, finding that the TACI model performed well across all test cases, without incorrectly predicting non-existent interactions. But the authors were particularly keen to see how the method performed when the causal interactions changed over time.

To do this, they used an established model of a dynamic system but generated a dataset where interactions (couplings) changed over time. They carried out four different causality tests to see how TACI did and found that it performed well, identifying when cause-and-effect interactions were eliminated and when the direction of the cause-and-effect relationship changed. In addition, TACI was able to detect how the strength of the causal relationship changed over time.
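To make the idea of a time-varying coupling concrete, here is a minimal sketch of how such a synthetic dataset could be generated. It is not the authors' test system: the choice of logistic maps, the parameter `r`, and the names `step_coupling` and `simulate_coupled_maps` are all assumptions made for illustration. One variable, `x`, drives another, `y`, with a strength that is switched off partway through the run.

```python
import random


def step_coupling(t, switch_at=500, strength=0.4):
    """Hypothetical schedule: x drives y until `switch_at`, then the link vanishes."""
    return strength if t < switch_at else 0.0


def simulate_coupled_maps(n_steps, coupling=step_coupling, r=3.8, seed=0):
    """Simulate two logistic maps in which x drives y with strength coupling(t).

    Illustrative toy system only (an assumption, not the model used in the study).
    """
    rng = random.Random(seed)
    x = rng.uniform(0.1, 0.9)
    y = rng.uniform(0.1, 0.9)
    xs, ys = [], []
    for t in range(n_steps):
        c = coupling(t)          # coupling strength at this time step, in [0, 1]
        fx = r * x * (1 - x)     # autonomous update of the driver
        fy = r * y * (1 - y)     # autonomous update of the driven variable
        # x never depends on y; y blends its own dynamics with the drive from x.
        x, y = fx, (1 - c) * fy + c * fx
        xs.append(x)
        ys.append(y)
    return xs, ys


# x influences y for the first 500 steps, then the two series evolve independently.
xs, ys = simulate_coupled_maps(1000)
```

A method that tracks time-varying causality would be expected to report an x-to-y interaction during the first half of such a series and none afterwards, and never a y-to-x interaction, since `x` is updated without reference to `y`.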

Next, the authors evaluated how well TACI performed with real data. They first tested it on the Jena weather dataset, a detailed set of meteorological measurements recorded in Jena, Germany. This dataset spans nearly 8 years and includes 14 distinct meteorological features recorded every 10 minutes, ranging from temperature to relative humidity.
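For a sense of scale, the figures quoted above imply a series of roughly 420,000 time steps. The short calculation below is a back-of-the-envelope sketch that treats "nearly 8 years" as a full 8 years of 365 days, so the results are upper bounds rather than the dataset's exact size; the variable names are illustrative.

```python
MINUTES_PER_SAMPLE = 10   # one reading every 10 minutes
N_FEATURES = 14           # meteorological variables per reading
N_YEARS = 8               # upper bound: the article says "nearly 8 years"

samples_per_day = 24 * 60 // MINUTES_PER_SAMPLE      # readings per day
rows_upper_bound = samples_per_day * 365 * N_YEARS   # time steps, ignoring leap days
values_upper_bound = rows_upper_bound * N_FEATURES   # individual measurements

print(samples_per_day, rows_upper_bound, values_upper_bound)
# prints: 144 420480 5886720
```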

An advantage of using these data is that many of the genuine interactions are already known. After training the TACI model using each of the weather variables connected to temperature, they found that causal interactions peak during times when the temperature drops, demonstrating that TACI can accurately predict true variations over time from messy real-world data.

They next tested the model using brain imaging datasets from a single monkey before, during and after anesthesia. Previous studies had only been able to analyze data at the level of each time window, but TACI was able to detect larger differences. Specifically, the researchers saw almost all interactions disappear during the anesthetized period, and then begin to re-emerge during recovery.

Moreover, when taking a finer-grained look at a pair of brain regions, they found coupling between these regions reduces as the anesthetic is first administered, and rapidly increases a few minutes into the recovery period. The authors suggest these fluctuations could lead to insights into how these brain regions drive each other’s activity during cognitive tasks.

The model performed well compared to other methods but is not without its limitations, requiring significant computational power and time. The authors envision that several improvements in the network architecture and training will allow for the method to be sped up considerably.

“An advantage of our approach is being able to train a single model that captures the dynamics of a time series across all points in time, allowing for time-varying interactions to be found without retraining the model, allowing us to find patterns across an entire data set, rather than use just one small chunk at a time,” says senior author Gordon Berman, Associate Professor of Biology, Emory University.

“We believe the approach will be broadly applicable to many types of complicated time series, and it holds particular promise for brain network data, where we hope to build our understanding of how parts of the brain shift their interactions as behavioral states and needs alter in the world.”

More information: Josuan Calderon et al, Inferring the time-varying coupling of dynamical systems with temporal convolutional autoencoders, eLife (2024). DOI: 10.7554/eLife.100692.1

Journal information: eLife
