By U.S. Department of Energy October 16, 2024

Collected at: https://scitechdaily.com/supercharging-scientific-discovery-with-fair-ai-models/

Adapting FAIR principles to AI models has transformed scientific research, enabling faster and more reliable results.

By integrating AI with datasets, researchers at Argonne National Laboratory have dramatically accelerated material analysis, paving the way for advanced, AI-powered scientific breakthroughs.

FAIR Principles for AI

Researchers initially proposed the FAIR principles—findable, accessible, interoperable, and reusable—to define best practices for maximizing the utilization of datasets by both researchers and machines. These principles have now been adapted for scientific datasets and research software, aiming to enhance the transparency, reproducibility, and reusability of research, as well as to promote software reuse over redevelopment.

The principles are now being extended to artificial intelligence (AI) models, which combine digital assets such as datasets, research software, and advanced computing. A paper published in Scientific Data presents a set of practical, concise, and measurable FAIR principles specifically tailored for AI models. It further details how combining FAIR AI models with datasets can significantly accelerate scientific discovery.
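To make "measurable" concrete, the sketch below checks a model's metadata record against one illustrative requirement per FAIR letter. It is a minimal illustration only: the `ModelRecord` class, its field names, the `fair_gaps` helper, and the placeholder identifier are all hypothetical and are not the criteria defined in the paper.

```python
from dataclasses import dataclass, fields


@dataclass
class ModelRecord:
    """Hypothetical metadata record for a published AI model."""
    identifier: str = ""        # findable: persistent identifier such as a DOI
    access_url: str = ""        # accessible: where the model can be retrieved
    framework: str = ""         # interoperable: framework or format of the model
    license: str = ""           # reusable: terms under which it may be reused
    training_dataset: str = ""  # reusable: provenance of the training data


def fair_gaps(record: ModelRecord) -> list[str]:
    """Return the names of metadata fields that are still empty."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


# Placeholder values for illustration, not a real model record.
record = ModelRecord(identifier="10.0000/example-model", framework="PyTorch")
print(fair_gaps(record))
```

A record with no gaps would pass this toy check. A real assessment would also need to verify that the identifier resolves and that the model can actually be loaded and run.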

Advancing Scientific Discovery Through FAIR AI

This work introduces a precise definition of FAIR principles for AI models and illustrates their application in high energy diffraction microscopy, an advanced technique for characterizing materials. Specifically, it demonstrates the integration of FAIR datasets and AI models to characterize materials at Argonne National Laboratory’s (ANL) Advanced Photon Source, achieving results two orders of magnitude (roughly 100 times) faster than traditional methods.

The study also highlights how linking ANL’s Advanced Photon Source with the Argonne Leadership Computing Facility can further enhance the speed of scientific discovery. This methodology overcomes hardware discrepancies, facilitates a unified AI language among researchers, and boosts AI-driven discoveries. The implementation of these FAIR guidelines for AI models is set to drive the development of next-generation AI technologies and foster new connections between data, AI models, and high-performance computing.

FAIR Data and AI Models in Action

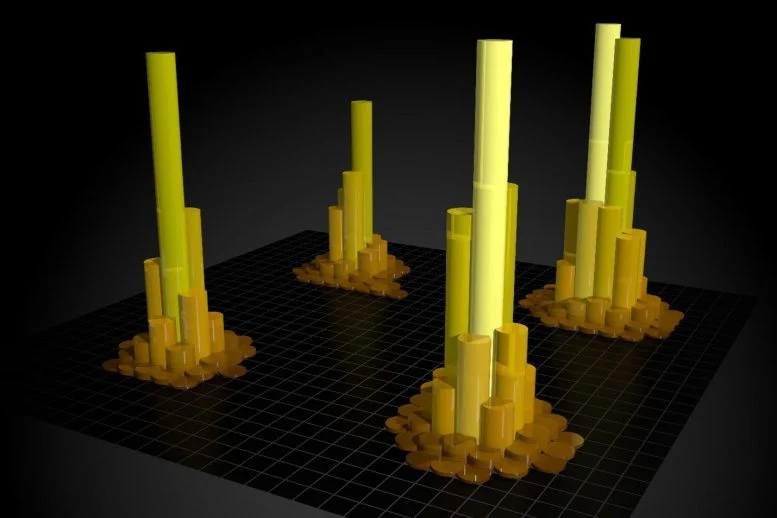

In this research, scientists created a FAIR experimental dataset of Bragg diffraction peaks from an undeformed bi-crystal gold sample, collected at the Advanced Photon Source at Argonne National Laboratory. This FAIR and AI-ready dataset was published in the Materials Data Facility.

The researchers then used this dataset to train three types of AI models at the Argonne Leadership Computing Facility (ALCF): a traditional AI model built with the open-source PyTorch library; an NVIDIA TensorRT version of that PyTorch model, run on the ThetaGPU supercomputer; and a model trained on the SambaNova DataScale® system at the ALCF AI Testbed. These AI models incorporate uncertainty quantification metrics that clearly indicate when AI predictions are trustworthy.
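The article does not say which uncertainty metrics the models use. As one common illustration of the idea, the sketch below treats the spread of predictions across several models as the uncertainty and flags a prediction as trustworthy only when that spread is small. The function name, the `max_std` threshold, and the sample numbers are assumptions made for this example, not details from the study.

```python
from statistics import mean, pstdev


def predict_with_uncertainty(ensemble_predictions, max_std=0.5):
    """Combine several models' predictions for a single input.

    The mean is reported as the prediction, and the population standard
    deviation across models serves as a simple uncertainty estimate.
    """
    center = mean(ensemble_predictions)
    spread = pstdev(ensemble_predictions)
    return {
        "prediction": center,
        "std": spread,
        "trustworthy": spread <= max_std,  # hypothetical acceptance rule
    }


# Models that agree closely yield a trusted prediction.
print(predict_with_uncertainty([10.0, 10.2, 9.8]))
# Models that disagree strongly yield a flagged prediction.
print(predict_with_uncertainty([10.0, 14.0, 6.0]))
```

In practice the threshold would be calibrated against held-out data rather than fixed by hand.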

Implementing and Verifying FAIR AI Models

These three models were then published in the Data and Learning Hub for Science, following the researchers’ proposed FAIR principles for AI models. The team then linked the FAIR AI models and datasets and used the ThetaGPU supercomputer at the ALCF to conduct reproducible AI-driven inference.

This entire workflow is orchestrated with Globus and executed with Globus Compute. The researchers developed software to automate this work and asked colleagues at the University of Illinois to independently verify the reproducibility of the findings.

Reference: “FAIR principles for AI models with a practical application for accelerated high energy diffraction microscopy” by Nikil Ravi, Pranshu Chaturvedi, E. A. Huerta, Zhengchun Liu, Ryan Chard, Aristana Scourtas, K. J. Schmidt, Kyle Chard, Ben Blaiszik and Ian Foster, 10 November 2022, Scientific Data.

DOI: 10.1038/s41597-022-01712-9
