August 22, 2024 by Bob Yirka, Tech Xplore

Collected at: https://techxplore.com/news/2024-08-method-ai-indefinitely.html

A team of AI researchers and computer scientists at the University of Alberta has found that the artificial neural networks currently used in deep-learning systems lose their ability to learn during extended training on new data. In their study, reported in the journal Nature, the group also describes a way to overcome this loss of plasticity in both supervised and reinforcement learning systems, allowing them to continue learning.

Over the past few years, AI systems have become mainstream. Among them are large language models (LLMs), which produce seemingly intelligent responses from chatbots. But one thing they all lack is the ability to continue learning while in use, a drawback that prevents them from growing more accurate the more they are used. They are also unable to grow any more intelligent by training on new datasets.

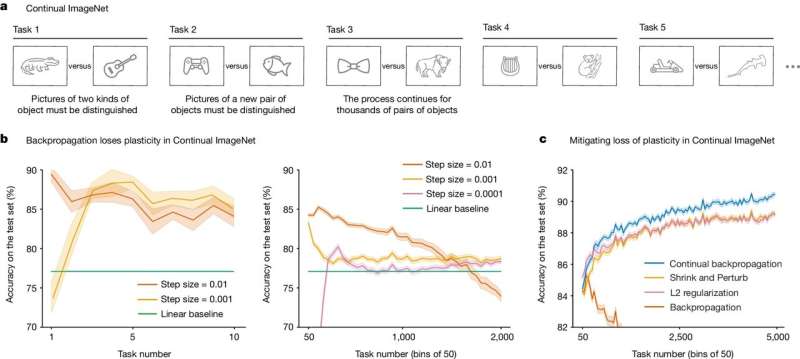

The researchers tested the ability of conventional neural networks to continue learning after training on their original datasets. They found what they describe as catastrophic forgetting, in which a system that is trained on new material loses the ability to carry out a task it could previously perform.

They note that this outcome is logical, considering that LLMs were designed to be sequential learning systems and learn by training on fixed datasets. During testing, the research team found that the systems also lose their ability to learn altogether if trained sequentially on multiple tasks, a phenomenon they describe as loss of plasticity. But they also found a way to fix the problem: resetting the weights that have previously been associated with nodes on the network.

In artificial neural networks, weights are numerical values attached to the connections between nodes, and each one sets how strongly a signal from one node influences the next. Training raises or lowers the weights based on the results of mathematical calculations. As a weight grows, so does the importance of the information it conveys.
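To make the role of weights concrete, here is a minimal sketch of a single node that scales each incoming signal by its weight and sums the results. The function name `node_output`, the ReLU activation, and the example numbers are illustrative assumptions, not details from the study.

```python
def node_output(inputs, weights, bias=0.0):
    """Weighted sum of incoming signals, passed through a ReLU activation.

    Hypothetical helper for illustration only.
    """
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return max(0.0, total)


signals = [1.0, 2.0]

# Two connections with modest weights.
baseline = node_output(signals, [0.5, 0.25])

# Doubling the first weight makes the first signal count for more.
boosted = node_output(signals, [1.0, 0.25])

print(baseline, boosted)
```

Raising a weight increases how much the signal on that connection contributes to the node's output, which is the sense in which a larger weight carries more importance.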

The researchers suggest that reinitializing the weights between training sessions, using the same methods that were used to initialize the system, should allow the system to maintain plasticity and continue learning on additional training datasets.
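The idea of resetting weights with the original initializer can be sketched roughly as follows. This is not the authors' implementation: the article does not say which initializer was used or which nodes are reset, so the uniform range of plus or minus 1/sqrt(n_in), the helper names `draw_row`, `init_layer`, and `reinitialize_units`, and the choice to reset every unit are all assumptions made for illustration.

```python
import math
import random


def draw_row(n_in, rng):
    """Draw one unit's incoming weights from the assumed initializer:
    uniform in [-1/sqrt(n_in), 1/sqrt(n_in)]."""
    bound = 1.0 / math.sqrt(n_in)
    return [rng.uniform(-bound, bound) for _ in range(n_in)]


def init_layer(n_in, n_out, rng):
    """Create a layer as a list of per-unit weight rows."""
    return [draw_row(n_in, rng) for _ in range(n_out)]


def reinitialize_units(layer, unit_indices, rng):
    """Redraw the incoming weights of the selected units in place,
    using the same initializer that created the layer."""
    n_in = len(layer[0])
    for i in unit_indices:
        layer[i] = draw_row(n_in, rng)
    return layer


rng = random.Random(0)
layer = init_layer(n_in=4, n_out=3, rng=rng)

# Stand-in for a long training run that has pushed the weights far
# from their initial range (no real training happens here).
layer = [[w * 50.0 for w in row] for row in layer]

# Between training sessions, reset the units (here: all of them).
reinitialize_units(layer, range(len(layer)), rng)

largest = max(abs(w) for row in layer for w in row)
print(largest <= 0.5)
```

Passing a subset of indices to `reinitialize_units` would reset only some units and leave the rest of the learned weights untouched. How many units to reset, and which ones, is a design choice this sketch leaves open.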

More information: Shibhansh Dohare, Loss of plasticity in deep continual learning, Nature (2024). DOI: 10.1038/s41586-024-07711-7. www.nature.com/articles/s41586-024-07711-7

Journal information: Nature
