S Akash Published on: 08 Jul 2024, 12:30 am

Collected at: https://www.analyticsinsight.net/artificial-intelligence/how-multimodal-ai-enhances-natural-interaction

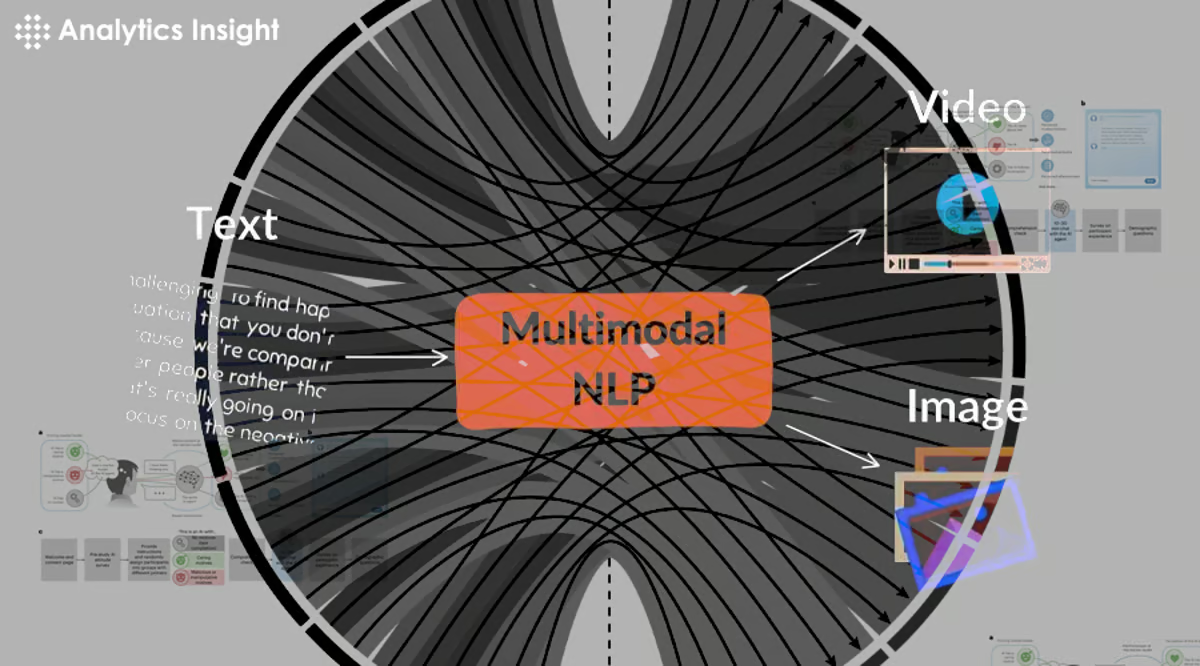

One of the most significant developments in AI is multimodal technology, which combines multiple forms of data input, such as text, speech, images, and gestures, to make interaction more natural. This convergence of sensory inputs allows AI systems to understand human communication more deeply and to deliver intuitive, effortless experiences across applications and lines of business.

Understanding Multimodal AI

Multimodal AI combines different modalities of data, from simple text input to complex audio and video inputs and even sensor readings, in a single system in order to understand the user's context and intent. Unlike traditional AI, which relies on a single modality such as text or voice, multimodal AI exploits the synergy among several modalities to deliver richer interactions and higher accuracy.

Key Components of Multimodal AI:

Speech Recognition: Lets AI systems recognize spoken language by transcribing it and interpreting voice commands or questions.

Natural Language Processing: Analyzes and interprets textual information so that bots can understand written input and generate relevant responses in context.

Computer Vision: Processes visual information from images and videos, allowing AI to identify objects, faces, gestures, scenes, and more.

Sensor Data Integration: Integrates data from sensors, such as accelerometers or GPS, that provide context about the user's environment or physical activity.
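As a rough illustration of how the outputs of these components might be combined, the following Python sketch uses late fusion: each modality produces its own confidence scores for candidate user intents, and a weighted average merges them. The intent labels, scores, weights, and function names are all hypothetical and chosen only for illustration; production systems typically learn the fusion from data rather than hard-coding weights.

```python
from typing import Dict

# Hypothetical per-modality weights (an assumption for illustration only).
MODALITY_WEIGHTS: Dict[str, float] = {"speech": 0.5, "vision": 0.3, "sensor": 0.2}


def fuse_intents(scores_by_modality: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Late fusion: weighted average of per-modality intent scores.

    Modalities that are absent from the input are skipped, and the weights
    of the remaining modalities are renormalized to sum to 1.
    """
    present = {m: w for m, w in MODALITY_WEIGHTS.items() if m in scores_by_modality}
    total_weight = sum(present.values())
    if total_weight == 0:
        raise ValueError("no known modality supplied")

    fused: Dict[str, float] = {}
    for modality, weight in present.items():
        for intent, score in scores_by_modality[modality].items():
            fused[intent] = fused.get(intent, 0.0) + (weight / total_weight) * score
    return fused


def best_intent(scores_by_modality: Dict[str, Dict[str, float]]) -> str:
    """Return the intent with the highest fused score."""
    fused = fuse_intents(scores_by_modality)
    return max(fused, key=fused.get)


if __name__ == "__main__":
    # Made-up scores: speech alone favors "play_music", but vision disagrees.
    observation = {
        "speech": {"play_music": 0.6, "set_timer": 0.4},
        "vision": {"play_music": 0.2, "set_timer": 0.8},
        "sensor": {"play_music": 0.5, "set_timer": 0.5},
    }
    print(best_intent(observation))
```

In this made-up example the speech scores alone would favor one intent, but the fused result favors the other, which is the kind of cross-modal correction that combining modalities is meant to provide.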

Enriching User Experience

Multimodal AI makes interaction more natural, intuitive, and user-friendly across platforms and devices. Here’s how multimodal AI technologies are changing interaction:

1. Better Accessibility

Multimodal AI opens digital interfaces to a wide range of users with different needs and preferences. For example, voice commands paired with complementary visual feedback make interfaces more usable for people with different disabilities.

2. Richer Channels of Communication

AI-powered virtual assistants, such as Amazon Alexa and Google Assistant, leverage multimodal capabilities to listen to voice input, display relevant information on screens, and even interpret gestures or facial expressions for more subtle interactions.

3. Seamless Device Integration

Multimodal AI can be integrated across different devices and platforms. A user can start an action on one device, for example by voice through a smart speaker, and complete it on another using the visual display of a smartphone or tablet. The experience stays continuous across devices, which can improve productivity.

4. Context-Aware Applications

Inputs from multiple modalities give AI applications the context they need to respond appropriately. For instance, speech commands, occupancy sensors, and camera visuals can all influence smart lighting in a room.
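The smart lighting example can be sketched as a small rule-based decision function. This is a minimal sketch under stated assumptions: the `RoomContext` fields, the command strings, and the priority order (voice first, then occupancy, then ambient light) are hypothetical design choices, not a description of any particular product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RoomContext:
    """Hypothetical snapshot of what each modality reports about a room."""
    voice_command: Optional[str]  # "lights on", "lights off", or None if nothing was said
    occupied: bool                # reading from an occupancy sensor
    ambient_brightness: float     # 0.0 (dark) to 1.0 (bright), estimated from a camera


def decide_brightness(ctx: RoomContext) -> float:
    """Return a target light level between 0.0 (off) and 1.0 (full)."""
    # An explicit voice command overrides everything else.
    if ctx.voice_command == "lights off":
        return 0.0
    if ctx.voice_command == "lights on":
        return 1.0

    # Without a command, keep the lights off in an empty room.
    if not ctx.occupied:
        return 0.0

    # Otherwise compensate for ambient light: the darker the room, the brighter the lamp.
    clamped = min(1.0, max(0.0, ctx.ambient_brightness))
    return round(1.0 - clamped, 2)


if __name__ == "__main__":
    print(decide_brightness(RoomContext(None, True, 0.25)))
```

Giving the voice command priority reflects one plausible design choice, namely that an explicit user request should win over inferred context; other orderings are equally defensible.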

Applications Across Industries

Multimodal AI has driven innovation across various industries by increasing interaction and user engagement. Some of its applications include:

1. Health

In healthcare, multimodal AI allows patients to engage naturally with medical devices. For example, AI-powered virtual nurses can receive a patient’s queries by voice, analyze medical images for diagnostics, and provide personalized health recommendations.

2. Education

Multimodal AI makes educational platforms interactive. Students can engage with course materials through voice, interactive simulations, and demonstrations, using the methods best suited to their learning styles.

3. Automotive

Multimodal AI in automotive applications can enhance driver-vehicle interaction. Voice, gestures, and facial expressions can be used to control infotainment systems, navigation, and driving aids, improving both safety and convenience.

4. Retail and Customer Service

Retailers deploy multimodal AI to improve interactions with customers. AI chatbots can handle customer inquiries through speech or text messaging and provide product recommendations based on visual preferences, while customers can try on products virtually by means of augmented reality.

Challenges and Future Directions

While multimodal AI has several noteworthy advantages, it also comes with challenges, such as the complexity of data integration, privacy concerns, and the need for consistent performance across varied environments. Further progress in AI research will depend on improving multimodal fusion techniques, enhancing real-time processing capabilities, and giving careful consideration to ethical issues, including data privacy and algorithmic bias.

Multimodal AI represents a paradigm shift in how humans communicate with machines, making that communication more natural and intuitive by integrating multiple data inputs. Speech recognition, natural language processing, computer vision, and sensor data integration come together to enable better user experiences across industries. As the technology evolves, multimodal AI will shape the future of interaction, making devices smarter, more responsive, and attuned to human needs and preferences.
