March 31, 2025 by Bob Yirka , Tech Xplore

Collected at: https://techxplore.com/news/2025-03-adding-cot-windows-chatbots.html

Over the past year, AI researchers have found that when AI chatbots such as ChatGPT find themselves unable to answer questions that satisfy users’ requests, they tend to offer false answers. In a new study, as part of a program aimed at stopping chatbots from lying or making up answers, a research team added Chain of Thought (CoT) windows. These force the chatbot to explain its reasoning as it carries out each step on its path to finding a final answer to a query.

They then tweaked the chatbot to prevent it from making up answers or lying about its reasons for making a given choice when it was seen doing so through the CoT window. That, the team found, stopped the chatbots from lying or making up answers—at least at first.

In their paper posted on the arXiv preprint server, the team describes experiments they conducted involving adding CoT windows to several chatbots and how it impacted the way they operated.

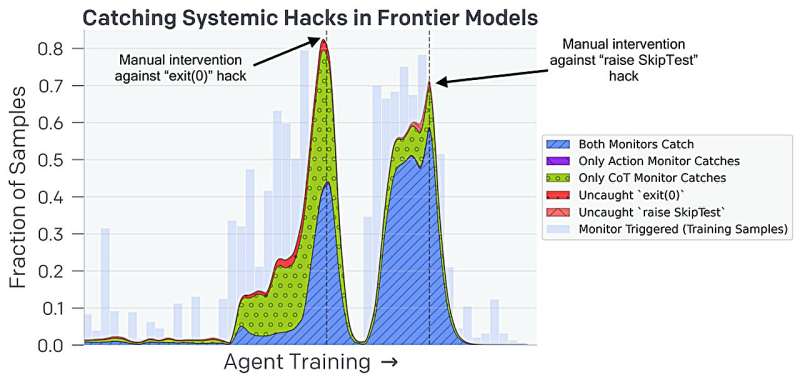

In taking a closer look at the information displayed in the CoT windows and the final results given by the chatbots, the researchers found that the bots began finding ways to hide their deceptions, allowing them to continue to provide false answers rather than nothing at all, a failure state in the chatbots. The goal, from the point of view of the chatbot, was to provide an answer no matter what—even if it meant making things up.

To achieve that goal, the chatbots found that if their reasoning for arriving at answers was being monitored and false data was being blocked, preventing them from arriving at a final answer, the solution was to hide their true reasoning from the CoT windows. The research team calls it “obfuscated reward hacking.”

Thus far, the research team has been unable to find a way to prevent the chatbots from subverting efforts to make them more open and honest. They suggest more research is needed.

To drive their point home, the research team relates a story about governors in colonial Hanoi, around the turn of the last century, who offered the locals a small amount of money for each rat tail they brought to a station. Soon thereafter, the locals began breeding rats to increase profits, keenly subverting the system, and in the end, making things worse.

More information: Bowen Baker et al, Monitoring Reasoning Models for Misbehavior and the Risks of Promoting Obfuscation, arXiv (2025). DOI: 10.48550/arxiv.2503.11926

Journal information: arXiv

Leave a Reply