November 20, 2024 by TranSpread

Collected at: https://techxplore.com/news/2024-11-edge-tech-robotic-steel-bridge.html

Orthotropic steel bridge decks (OSDs) are fundamental to long-span bridge designs, prized for their high load-carrying efficiency and lightweight characteristics. However, their intricate structure makes them vulnerable to fatigue cracking, particularly at key connection points, posing serious safety risks.

Conventional inspection methods, such as visual checks and magnetic testing, often lack the precision and reliability needed to detect internal or subtle cracks. Phased Array Ultrasonic Testing (PAUT) has shown promise but has not fully resolved these challenges, so more advanced and efficient crack detection technologies are still needed.

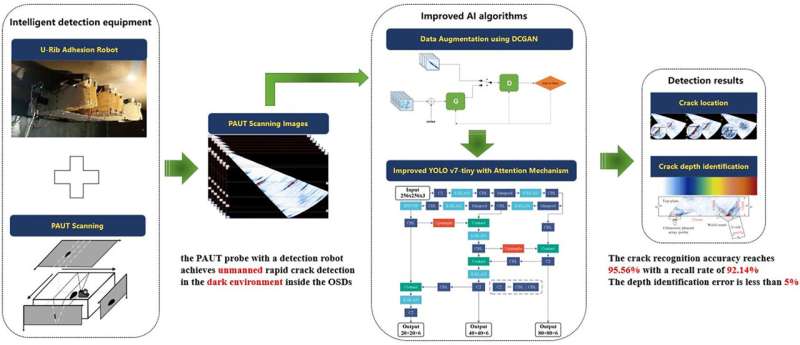

In a study conducted by teams from Southwest Jiaotong University and The Hong Kong Polytechnic University and published in the Journal of Infrastructure Intelligence and Resilience, the researchers introduce an automated system for fatigue crack detection in OSDs, using a robotic platform combined with ultrasonic phased array technology.

The system relies on two deep learning models: a Deep Convolutional Generative Adversarial Network (DCGAN) for data generation and YOLOv7-tiny for high-speed, real-time crack detection. According to the researchers, the approach significantly improves accuracy and efficiency and could change bridge maintenance practices.

The study’s core innovation lies in fusing robotic automation with state-of-the-art deep learning for effective crack detection. The robotic system, equipped with a phased array ultrasonic probe, autonomously scans OSDs, significantly reducing the need for human involvement.

The researchers used the DCGAN to augment the PAUT image datasets, boosting the algorithm’s learning capabilities. Of the models tested, YOLOv7-tiny proved the most effective, offering the best combination of speed and precision for real-time crack localization and depth estimation.

A standout feature of this approach is the integration of attention mechanisms, which improved YOLOv7-tiny’s ability to detect even small or overlapping cracks. The team also developed a new method that analyzes echo intensity to estimate crack depth, with an error below 5% relative to Time of Flight Diffraction (TOFD) benchmarks.
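To make the 5% figure concrete, the short Python sketch below shows one common way such a comparison can be computed: the relative error of an estimated depth against a reference depth. It is an illustration only, not the authors' method. The function name `relative_error` and all depth values are hypothetical, and the article does not say exactly how the study defines its error.

```python
def relative_error(estimated_mm: float, reference_mm: float) -> float:
    """Return |estimated - reference| / reference as a fraction."""
    if reference_mm <= 0:
        raise ValueError("reference depth must be positive")
    return abs(estimated_mm - reference_mm) / reference_mm


# Hypothetical (estimated, reference) crack depths in millimetres.
# These numbers are invented for illustration; they are not from the study.
pairs = [(4.1, 4.0), (6.8, 7.0), (2.95, 3.0)]

errors = [relative_error(est, ref) for est, ref in pairs]

# True only if every estimate is within 5% of its reference value.
within_margin = all(e < 0.05 for e in errors)

for (est, ref), err in zip(pairs, errors):
    print(f"estimated={est} mm, reference={ref} mm, error={err:.1%}")
print("all within 5%:", within_margin)
```

In this sketch the reference depth stands in for a TOFD measurement, and the 0.05 threshold mirrors the margin quoted above.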

This comprehensive system not only improves detection speed but also ensures reliable field performance, setting a new standard for structural health monitoring and maintenance in critical infrastructure.

Dr. Hong-ye Gou, lead researcher at Southwest Jiaotong University, stated, “Our research addresses key safety concerns in bridge maintenance by harnessing robotic automation and deep learning technologies. The result is a highly efficient system that can detect fatigue cracks with unprecedented accuracy, even in challenging conditions.

“This advancement holds tremendous potential for enhancing infrastructure safety. By precisely identifying cracks that conventional methods might overlook, our approach ensures bridges are more resilient, ultimately protecting public safety and extending the service life of these vital structures.”

This cutting-edge detection system has far-reaching applications for infrastructure maintenance and safety. By automating the inspection of OSDs, it drastically reduces the need for manual labor, minimizing human error while delivering precise, real-time results.

The technology enables early detection of structural issues, which can help prevent catastrophic failures. Moreover, the integration of deep learning models lays the groundwork for advances in predictive maintenance and continuous structural health monitoring. This could lower maintenance costs and extend the lifespan of key transportation networks.

More information: Fei Hu et al., Automatic PAUT crack detection and depth identification framework based on inspection robot and deep learning method, Journal of Infrastructure Intelligence and Resilience (2024). DOI: 10.1016/j.iintel.2024.100113
