November 12, 2024 by Bob Yirka, Tech Xplore

Collected at: https://techxplore.com/news/2024-11-virtual-generative-ai-robots-traverse.html

A team of roboticists and engineers at MIT CSAIL and the Institute for AI and Fundamental Interactions has developed a generative AI approach to teaching robots how to traverse terrain and move around objects in the real world.

The group has published a paper describing their work and possible uses for it on the arXiv preprint server. They also presented their ideas at the recent Conference on Robot Learning (CoRL 2024), held in Munich Nov. 6–9.

Getting robots to navigate in the real world typically involves either teaching them to learn on the fly or training them with videos of similar robots in real-world environments. While such training has proven effective in limited environments, it tends to fail when a robot encounters something novel. In this new effort, the team at MIT developed virtual training that better translates to the real world.

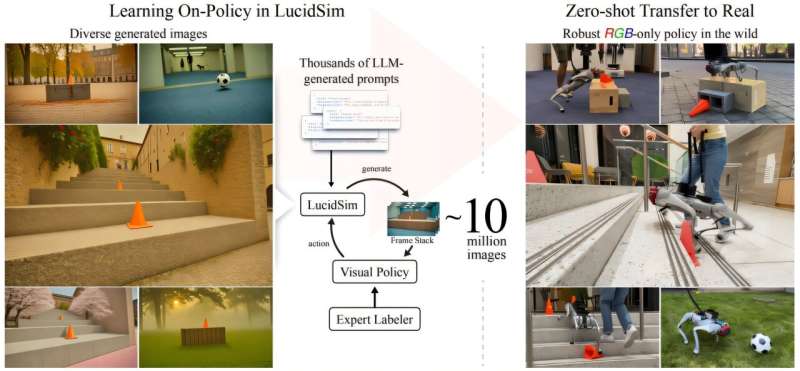

The work involved using generative AI and a physics simulator to allow a robot to navigate a virtual world as a means for learning to operate in the real world. They call the system LucidSim and have used it to train a robotic dog in parkour, a sport where players attempt to traverse obstacles in unknown territory as quickly as possible.

The approach involves first prompting ChatGPT with thousands of queries designed to get the LLM to create descriptions of a wide range of environments, including outdoor weather conditions. Next, the descriptions given by ChatGPT are fed to a 3D mapping system that uses them (along with AI-generated images and physics simulators) to generate a video that also gives a trajectory for the robot to follow.
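To make the flow of that pipeline concrete, here is a toy sketch in Python. It is not the LucidSim code: the function names, the word lists, and the stubbed language model, image generator and physics simulator are all hypothetical stand-ins chosen for illustration. It only shows how text descriptions, generated frames and a simulated trajectory might be bundled into one training sample.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical vocabularies; a real system would use thousands of queries.
TERRAINS = ["stairs", "stacked boxes", "a gravel path"]
WEATHER = ["bright sun", "light rain", "fog"]


def build_queries(terrains, weather):
    """Combine every terrain with every weather condition into one query."""
    return [f"Describe a scene with {t} in {w}." for t, w in product(terrains, weather)]


def describe_scene(query):
    """Stand-in for an LLM call; a real system would query a chat model here."""
    return f"Scene for query: {query}"


def simulate_trajectory(steps):
    """Stand-in for a physics simulator: straight-line (x, y) waypoints."""
    return [(0.1 * i, 0.0) for i in range(steps)]


def render_frame(description, waypoint):
    """Stand-in for an image generator: returns a text label, not pixels."""
    return f"{description} @ x={waypoint[0]:.1f}"


@dataclass
class TrainingSample:
    description: str   # text description of the environment
    frames: list       # one generated frame per waypoint
    trajectory: list   # waypoints the robot should follow


def make_training_sample(query, steps=3):
    """Turn one query into a description, a trajectory and matching frames."""
    description = describe_scene(query)
    trajectory = simulate_trajectory(steps)
    frames = [render_frame(description, wp) for wp in trajectory]
    return TrainingSample(description, frames, trajectory)


samples = [make_training_sample(q) for q in build_queries(TERRAINS, WEATHER)]
print(len(samples), "samples generated")
```

Each stub would be replaced by a real component in practice; the point is only that every generated frame stays paired with the waypoint it was rendered for, so the video and the trajectory can be used together during training.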

The robot is then trained to make its way through the terrain in the virtual world and learn skills that it can use in a real environment. Robots trained using the system learned to clamber over boxes, climb stairs and deal with whatever they encountered. After virtual training, the robot was tested in the real world.

The researchers tested their system using a small, four-legged robot equipped with a webcam. They found it performed better than a similar system trained the traditional way. The team suggests that improvements to their system could lead to a new approach to training robots in general.

More information: Alan Yu et al, Learning Visual Parkour from Generated Images, arXiv (2024). DOI: 10.48550/arxiv.2411.00083

LucidSim: lucidsim.github.io/

Journal information: arXiv
