November 8, 2024 by Intelligent Computing

Collected at: https://techxplore.com/news/2024-11-deep-advancing-affective-diverse-ai.html

Affective computing, a field focused on understanding and emulating human emotions, has seen significant advancements thanks to deep learning. However, researchers at the Technical University of Munich caution that an over-reliance on deep learning may hinder progress by overlooking other emerging trends in artificial intelligence.

Their review, published Sep. 16 in Intelligent Computing, advocates using a variety of AI methodologies to tackle ongoing challenges in affective computing.

Affective computing draws on a range of signals, including facial expressions, vocal and linguistic cues, and physiological data from wearable sensors, to analyze and synthesize affect. While deep learning has significantly improved tasks like emotion recognition through innovations in transfer learning, self-supervised learning and transformer architectures, it also brings challenges, including poor generalization, limited adaptability across cultures and a lack of interpretability.

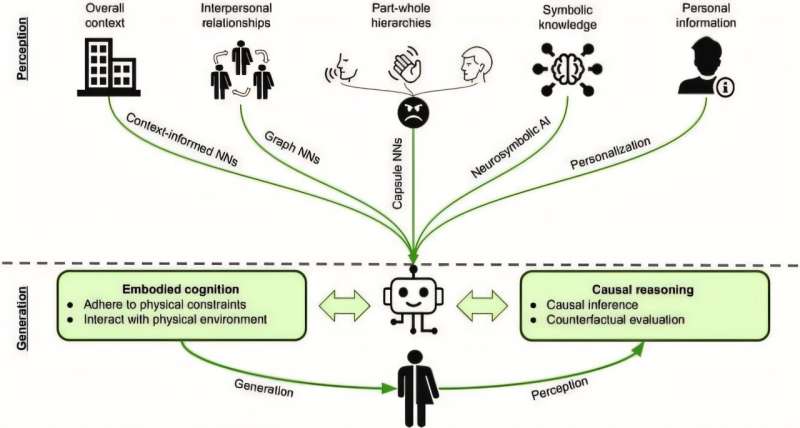

To address these limitations, the authors outline a comprehensive framework for developing embodied agents capable of interacting with multiple users in many different contexts. Central to this vision is assessing users’ goals, mental states and interrelationships in order to sustain longer interactions. The authors recommend integrating the following nine components, which they describe in detail, to improve human-agent interactions:

- Graphs that map user relationships and context.

- Capsules that model hierarchies for understanding affective interactions.

- Neurosymbolic engines that facilitate reasoning about interactions using affective primitives.

- Symbols that establish common knowledge and rules for interaction.

- Embodiment that enables collaborative learning in constrained environments.

- Personalization that tailors interactions to individual user characteristics.

- Generative AI that creates responses across multiple modalities.

- Causal models that differentiate causes and effects for higher-order reasoning.

- Spiking neural networks that enable efficient deployment of neural networks in resource-limited settings.

The authors also describe several next-generation neural networks, resurgent themes and new frontiers in affective computing.

Next-generation neural networks are advancing beyond traditional deep learning models to address limitations in capturing complex data structures, spatial relationships and energy efficiency. Capsule networks enhance convolutional networks by preserving spatial hierarchies, improving the modeling of complex entities, such as human body parts, which is vital in health care and emotion recognition.

Geometric deep learning extends deep learning to non-Euclidean structures such as graphs and manifolds, allowing for a better understanding of complex data interactions. It has proven particularly useful in sentiment and facial analysis. Mimicking the threshold-based firing of biological neurons, spiking neural networks offer a more energy-efficient alternative for real-time applications, making them suitable for environments with limited resources.
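The threshold-based firing described above can be illustrated with the textbook leaky integrate-and-fire (LIF) neuron. The sketch below is a generic discrete-time LIF model, not the specific spiking architecture the review discusses; the function name and the parameter values (`threshold`, `leak`, `v_reset`) are illustrative assumptions. The neuron accumulates input, slowly "leaks" charge, and emits a binary spike only when its potential crosses a threshold, so its output is a sparse train of events rather than a dense stream of real numbers.

```python
from typing import List


def lif_spike_train(
    input_current: List[float],
    threshold: float = 1.0,  # illustrative firing threshold
    leak: float = 0.9,       # illustrative decay factor per time step
    v_reset: float = 0.0,    # potential after a spike
) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron in discrete time.

    At each step the membrane potential decays by `leak`, integrates the
    input, and the neuron emits a spike (1) when the potential reaches
    `threshold`, after which the potential is reset to `v_reset`.
    """
    v = v_reset
    spikes = []
    for current in input_current:
        v = leak * v + current   # leaky integration of the input
        if v >= threshold:       # threshold-based firing
            spikes.append(1)
            v = v_reset          # reset after the spike
        else:
            spikes.append(0)     # no event emitted this step
    return spikes


if __name__ == "__main__":
    # A weak constant input makes the neuron fire only every few steps.
    print(lif_spike_train([0.3] * 10))  # -> [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

Because downstream computation is triggered only by the sparse spikes, neuromorphic hardware can skip work on the many silent time steps, which is the intuition behind the energy-efficiency claim.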

Traditional AI concepts, adapted to new contexts, can improve affective computing applications. Neurosymbolic systems show particular promise, combining the pattern recognition of deep learning with symbolic reasoning from traditional AI to improve the explainability and robustness of deep learning models.

As these models enter real-world settings, they must conform to social norms and interpret emotions appropriately across cultures. Embodied cognition furthers this goal by situating AI agents in physical or simulated contexts, supporting natural interactions. Through reinforcement learning, embodied agents can achieve better situatedness and interactivity, which is especially beneficial in complex fields such as health care and education.

In addition, three major ideas have emerged in affective computing in recent years: generative models, personalization and causal reasoning. Advances in generative models, especially diffusion-based processes, enable AI to produce contextually relevant emotional expressions across various media, paving the way for interactive, embodied agents.

Moving beyond one-size-fits-all models, personalization adapts responses to individual user characteristics while preserving data privacy through federated learning. By incorporating causal reasoning, affective computing systems can not only identify associations but also reason about interventions and counterfactuals in emotional contexts, enhancing their adaptability and transparency.
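To make the role of federated learning concrete, here is a minimal sketch of federated averaging (FedAvg), a widely used federated learning scheme. It is a generic illustration, not the method from the review: the one-parameter linear model, the toy client data and the hyperparameters are all hypothetical. Each client trains on its own data locally and sends back only an updated model weight; the server never sees the raw data and simply averages the returned weights, weighted by how many samples each client holds.

```python
from typing import List, Tuple

# Hypothetical per-user dataset: (feature, target) pairs that stay on-device.
Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.1, epochs: int = 5) -> float:
    """Run a few epochs of gradient descent on a 1-D linear model y ~ w * x,
    using only this client's data (mean squared error loss)."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(clients: List[Dataset], rounds: int = 20, w: float = 0.0) -> float:
    """FedAvg-style training loop: in each round every client trains locally,
    and the server averages the returned weights by client dataset size."""
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        local_weights = [local_update(w, d) for d in clients]  # only weights leave clients
        w = sum(len(d) * wk for d, wk in zip(clients, local_weights)) / total
    return w


if __name__ == "__main__":
    # Two hypothetical users whose private data both follow y = 2x.
    clients = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
    print(round(federated_averaging(clients), 4))  # -> 2.0
```

The shared global weight could then serve as a starting point for further on-device fine-tuning per user. Note that exchanged model updates can still leak some information, so this sketch shows the data flow of federated learning rather than a complete privacy guarantee.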

The future of affective computing could hinge on combining a variety of AI methodologies. Moving beyond a deep learning-centric approach could pave the way for more sophisticated, culturally aware and ethically designed systems. The integration of multiple approaches promises a future where technology not only understands but also enriches human emotions, marking a significant step towards truly intelligent and empathetic AI.

More information: Andreas Triantafyllopoulos et al., Beyond Deep Learning: Charting the Next Frontiers of Affective Computing, Intelligent Computing (2024). DOI: 10.34133/icomputing.0089
