October 30, 2024 by Lynn Shea, Carnegie Mellon University Mechanical Engineering

Collected at: https://techxplore.com/news/2024-10-ai-generates-temperature.html

Before engineers at Carnegie Mellon University begin conducting additive manufacturing (AM) experiments in two dedicated labs equipped with a wide range of 3D printing equipment, they often rely on artificial intelligence to develop models that can monitor and control the build process. Among the many models they are currently developing and testing is one that can precisely monitor and control temperature.

Temperature affects melt pool geometry, defect formation, and microstructure evolution. So, the ability to accurately predict and control temperature is an important stepping stone toward optimizing the additive manufacturing process and ensuring the quality of printed parts.

Currently, neither in-situ thermal sensors nor computer modeling methods have been able to accurately reconstruct the full 3-dimensional temperature profile in real time.

Existing in-situ thermal sensors that have been employed to monitor part temperature during processing, including thermocouples, infrared (IR) cameras, and multi-wavelength pyrometers, provide only partial measurements of the temperature distribution over the part.

Data-driven models can only provide accurate predictions over a short time horizon because of errors that tend to accumulate over time. And high-fidelity numerical models can generate temperature distributions for the entire part, but they are not suitable for real-time monitoring due to their high computational demands.

Jiangce Chen, a postdoctoral fellow with the Manufacturing Futures Institute, working under the direction of Sneha Prabha Narra and Christopher McComb, has found a way to reconstruct the complete temperature profile in real time using only the partial data available from in-situ sensors.

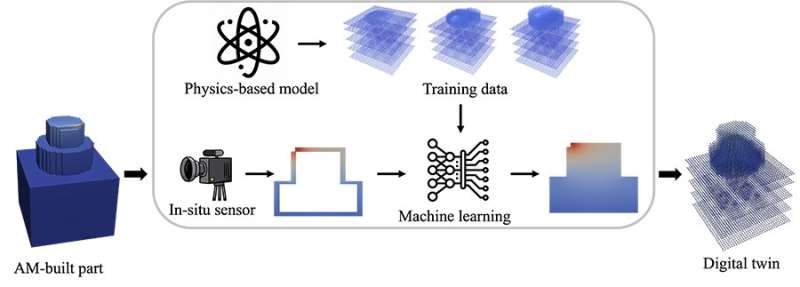

Inspired by image inpainting, a well-established computer vision and image processing technique, Chen has developed a hybrid framework that combines thermal sensors, numerical simulations, and machine learning (ML) to restore the complete temperature history of additively manufactured parts.

“We wanted to use computational tools to show how heat evolves throughout the AM build process,” explained Chen, who added that, “By monitoring the temperature in real time, we can develop automated systems that could make adjustments that produce the qualified parts needed in demanding applications such as aerospace.”

The collaboration between engineers with strong backgrounds in both additive manufacturing and advanced computational tools is what can drive such advances.

“What is really unique about this new model is its ability to train on simulation data. It’s super expensive to run these AM experiments, so we are excited to push how far we can get with simulations,” explained McComb, an associate professor of mechanical engineering.

The framework that Chen developed feeds the partial temperature distribution data obtained from thermal sensors into an ML model similar to an image inpainting model, which instead of generating missing parts of an image, generates the temperature distribution over the entire part.
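The inpainting analogy can be made concrete with a small sketch of how a training pair might be assembled from one simulated temperature field. The article does not describe the actual input encoding, so the function name `build_inpainting_input`, the per-node `[temperature, observed_flag]` layout, and the fill value are assumptions for illustration only.

```python
def build_inpainting_input(full_temperature, observed_nodes, fill_value=0.0):
    """Hide every node the sensor cannot see (hypothetical encoding).

    full_temperature: simulated temperature at each node of the part
    observed_nodes:   indices of nodes covered by the thermal sensor
    Returns one [temperature, observed_flag] pair per node.
    """
    observed = set(observed_nodes)
    features = []
    for node, temp in enumerate(full_temperature):
        if node in observed:
            features.append([temp, 1.0])        # measured value, flag = 1
        else:
            features.append([fill_value, 0.0])  # placeholder, flag = 0
    return features


# Made-up 5-node part; only nodes 0 and 3 are visible to the sensor.
simulated = [850.0, 790.0, 640.0, 910.0, 500.0]
model_input = build_inpainting_input(simulated, observed_nodes=[0, 3])
target = simulated  # the model would be trained to recover the full field
```

In this sketch the mask plays the role of the missing region in an image: the model sees only the flagged nodes and is asked to fill in the rest.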

Their inpainting model is trained on a dataset generated by a numerical model calibrated using experimental data and is capable of restoring the full part temperature distribution in real time. The numerical modeling dataset contains temperature histories of parts with various geometries, which will enable the inpainting model to be generalized across the wide range of part geometries that AM is used to produce.

Because inpainting methods have traditionally been applied to the restoration of 2D structured data, such as images, Chen and his team had to find a way to simulate diverse geometric models for the AM process that exist in 3D.

Convolutional neural networks (CNNs) are ineffective for 3D parts with various geometries. By instead employing graph convolutional neural networks (GCNNs), which can represent unstructured data, their method can model the layer-by-layer construction of 3D printed parts.
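To illustrate why a graph formulation suits irregular geometries, here is a minimal sketch of one generic graph convolution step in plain Python. It uses simple mean aggregation over each node and its neighbors followed by a linear map and ReLU; this is a textbook-style layer chosen for clarity, not the architecture used by the researchers, and the function name `graph_conv_mean` is hypothetical.

```python
def graph_conv_mean(features, edges, weight):
    """One mean-aggregation graph convolution step (illustrative only).

    features: per-node feature lists, shape n x f_in
    edges:    undirected (i, j) node pairs, e.g. from a mesh of the part
    weight:   f_in x f_out matrix as a list of lists
    """
    n = len(features)
    neighbors = [{i} for i in range(n)]  # each node includes itself
    for i, j in edges:
        neighbors[i].add(j)
        neighbors[j].add(i)

    f_in, f_out = len(weight), len(weight[0])
    out = []
    for i in range(n):
        # Average each input channel over node i and its neighbors.
        agg = [sum(features[k][c] for k in neighbors[i]) / len(neighbors[i])
               for c in range(f_in)]
        # Linear map followed by ReLU.
        out.append([max(0.0, sum(agg[c] * weight[c][o] for c in range(f_in)))
                    for o in range(f_out)])
    return out


# Three nodes in a chain 0-1-2; only node 0 carries a (normalized) reading.
hidden = graph_conv_mean([[1.0], [0.0], [0.0]], [(0, 1), (1, 2)], [[1.0]])
```

Because the layer only needs a node list and an edge list, the same code applies to any part shape, and adding a printed layer amounts to adding nodes and edges to the graph.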

To evaluate the performance of the inpainting model on individual geometries, they trained the model separately on the training data of each geometry and tested it on the test data from the same geometry. The ML model performed exceptionally well in capturing the physical patterns of temperature distribution in test data that shares similar geometry and is generated using the same numerical model as the training data.

To assess the generalizability of the ML model to geometries unseen during training, they also conducted 10-fold leave-one-out cross-validation. In each round, the data from nine of the 10 geometric models were used for training, while the remaining one was used for validation.
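The splitting scheme described here can be sketched in a few lines. The geometry names below are placeholders, since the article does not list the actual parts, and `leave_one_geometry_out` is a hypothetical helper rather than code from the study.

```python
def leave_one_geometry_out(geometry_names):
    """Yield (training_geometries, held_out_geometry) for each round."""
    for held_out in geometry_names:
        train = [g for g in geometry_names if g != held_out]
        yield train, held_out


# Placeholder names for the 10 geometric models.
geometries = [f"geometry_{i}" for i in range(10)]
splits = list(leave_one_geometry_out(geometries))

for train, held_out in splits:
    # A model would be trained on `train` and validated on `held_out` here.
    pass
```

Splitting by geometry rather than by individual sample is what makes each round a test on a shape the model has never seen.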

Considering the small scale of the training dataset, consisting of only nine geometries, the overall performance of the ML model on the other validation rounds was remarkably good. This implies that the generalizability of the ML model could be enhanced by expanding the training dataset to include a larger variety of geometries.

Finally, the researchers assessed the performance of the ML model on experimental data from a wall-fabrication process to determine how different treatments of the training data affected its performance.

In the first training process, the ML model was trained on the “normal data,” consisting of all simulation data from the complicated geometric models, excluding the wall. In the second process, the ML model was trained exclusively on the “wall simulation data.” And, in the third process, they augmented the “normal data” with the “wall simulation data.”
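The three treatments can be expressed as three ways of assembling a training set from the same pool of simulation data. The dictionary layout, the key `"wall"`, and the sample labels below are assumptions made for this sketch; the article does not describe how the data were stored.

```python
def build_training_sets(simulation_data, wall_key="wall"):
    """Assemble the three training sets from per-geometry simulation samples.

    simulation_data: dict mapping geometry name -> list of samples
    """
    normal = [sample
              for name, samples in simulation_data.items()
              if name != wall_key
              for sample in samples]
    wall_only = list(simulation_data[wall_key])
    return {
        "normal": normal,                  # every geometry except the wall
        "wall_only": wall_only,            # wall simulation data only
        "augmented": normal + wall_only,   # normal data plus wall data
    }


# Made-up sample labels standing in for simulated temperature histories.
pool = {
    "bracket": ["b1", "b2"],
    "lattice": ["l1"],
    "wall": ["w1", "w2"],
}
training_sets = build_training_sets(pool)
```

Each of the three sets would then be used to train a separate copy of the model before evaluating it on the experimental wall data.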

They found significant differences between the simulation data and the experimental data. When the ML model was trained solely on the wall-building simulation data, it failed to effectively capture the patterns present in the experimental data.

However, the augmented data process consistently demonstrated steady improvements during training and achieved the best performance among the three processes, indicating that the ML model is capable of synthesizing the patterns it learns from other geometries with the geometric features it learns from the wall simulation data. By integrating this knowledge, the ML model can better predict the experimental data of wall building.

Beyond the promising results of this work, the researchers are confident that they can make further improvements. By employing more advanced physics-based numerical models to enhance the quality of the training dataset, they expect to improve the ML model’s fidelity in real-world applications. They also believe that further research is needed to examine how data resolution affects the ML model’s ability to maintain high accuracy.

The researchers also want to gather more information about IR cameras’ ability to capture the entire boundary of an object with complex geometry, and to expand future work to include a thermal sensing system that involves multiple types of thermal sensors, such as two-color cameras and thermocouples.
