September 26, 2024 by Adam Zewe, Massachusetts Institute of Technology

Collected at: https://phys.org/news/2024-09-protocol-leverages-quantum-mechanics-shield.html

Deep-learning models are being used in many fields, from health care diagnostics to financial forecasting. However, these models are so computationally intensive that they require the use of powerful cloud-based servers.

This reliance on cloud computing poses significant security risks, particularly in areas like health care, where hospitals may be hesitant to use AI tools to analyze confidential patient data due to privacy concerns.

To tackle this pressing issue, MIT researchers have developed a security protocol that leverages the quantum properties of light to guarantee that data sent to and from a cloud server remain secure during deep-learning computations.

By encoding data into the laser light used in fiber optic communications systems, the protocol exploits the fundamental principles of quantum mechanics, making it impossible for attackers to copy or intercept the information without detection.

Moreover, the technique guarantees security without compromising the accuracy of the deep-learning models. In tests, the researchers demonstrated that their protocol could maintain 96% accuracy while ensuring robust security.

“Deep learning models like GPT-4 have unprecedented capabilities but require massive computational resources.

“Our protocol enables users to harness these powerful models without compromising the privacy of their data or the proprietary nature of the models themselves,” says Kfir Sulimany, an MIT postdoc in the Research Laboratory of Electronics (RLE) and lead author of a paper posted to the arXiv preprint server on this security protocol.

Sulimany is joined on the paper by Sri Krishna Vadlamani, an MIT postdoc; Ryan Hamerly, a former postdoc now at NTT Research, Inc.; Prahlad Iyengar, an electrical engineering and computer science (EECS) graduate student; and senior author Dirk Englund, a professor in EECS, principal investigator of the Quantum Photonics and Artificial Intelligence Group and of RLE.

The research was recently presented at the Annual Conference on Quantum Cryptography (QCrypt 2024).

A two-way street for security in deep learning

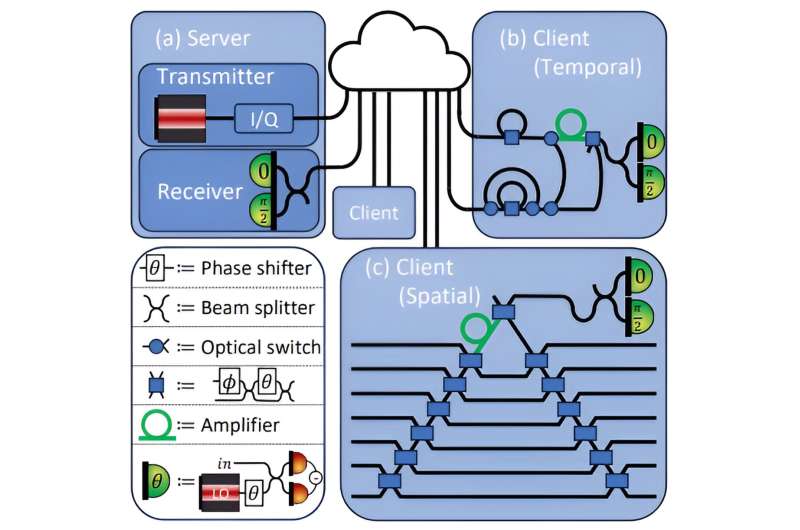

The cloud-based computation scenario the researchers focused on involves two parties—a client that has confidential data, like medical images, and a central server that controls a deep learning model.

The client wants to use the deep-learning model to make a prediction, such as whether a patient has cancer based on medical images, without revealing information about the patient.

In this scenario, sensitive data must be sent to generate a prediction. However, during the process the patient data must remain secure.

Also, the server does not want to reveal any parts of the proprietary model that a company like OpenAI spent years and millions of dollars building.

“Both parties have something they want to hide,” adds Vadlamani.

In digital computation, a bad actor could easily copy the data sent from the server or the client.

Quantum information, on the other hand, cannot be perfectly copied. The researchers leverage this property, known as the no-cloning theorem, in their security protocol.

For the researchers’ protocol, the server encodes the weights of a deep neural network into an optical field using laser light.

A neural network is a deep-learning model that consists of layers of interconnected nodes, or neurons, that perform computation on data. The weights are the components of the model that do the mathematical operations on each input, one layer at a time. The output of one layer is fed into the next layer until the final layer generates a prediction.
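The layer-by-layer computation described above can be sketched in a few lines of Python. This is only an illustrative toy, not the researchers' model: the weight values, the ReLU activation, and the function names `dense`, `relu`, and `forward` are all assumptions made for the example.

```python
def relu(values):
    """Clamp negative values to zero (a common activation; assumed here)."""
    return [max(0.0, v) for v in values]


def dense(weights, inputs):
    """Apply one layer: each row of weights produces one neuron's output."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def forward(layers, inputs):
    """Feed the output of each layer into the next until the last layer."""
    activations = inputs
    for index, weights in enumerate(layers):
        activations = dense(weights, activations)
        # Apply the activation on every layer except the final one.
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


# Hypothetical two-layer network with made-up weights.
layers = [
    [[1.0, -1.0], [0.5, 0.5]],  # layer 1: 2 inputs -> 2 neurons
    [[1.0, 2.0]],               # layer 2: 2 inputs -> 1 output
]
prediction = forward(layers, [2.0, 1.0])
print(prediction)  # [4.0]
```

Here the first layer maps the input `[2.0, 1.0]` to `[1.0, 1.5]`, and the second layer combines those two values into the single prediction.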

The server transmits the network’s weights to the client, which implements operations to get a result based on its private data. The data remain shielded from the server.

At the same time, the security protocol allows the client to measure only one result, and it prevents the client from copying the weights because of the quantum nature of light.

Once the client feeds the first result into the next layer, the protocol is designed to cancel out the first layer so the client can’t learn anything else about the model.

“Instead of measuring all the incoming light from the server, the client only measures the light that is necessary to run the deep neural network and feed the result into the next layer. Then the client sends the residual light back to the server for security checks,” Sulimany explains.

Due to the no-cloning theorem, the client unavoidably introduces tiny errors into the model while measuring its result. When the server receives the residual light from the client, the server can measure these errors to determine if any information was leaked. Importantly, this residual light is proven not to reveal the client data.
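The round trip described above (weights sent out, one result measured per layer, residual returned and checked) can be mimicked with a purely classical toy. The sketch below does not simulate any quantum optics and is not the researchers' protocol: the disturbance is just a stand-in number, and `BASELINE_DISTURBANCE`, `THRESHOLD`, `extra_probing`, and all function names are hypothetical values chosen for illustration.

```python
# Hypothetical numbers: the unavoidable error of an honest measurement,
# and the largest error the server tolerates before it aborts.
BASELINE_DISTURBANCE = 0.01
THRESHOLD = 0.1


def client_layer_step(weights, activations, extra_probing=0.0):
    """Client computes one layer's result and returns the 'residual' error.

    extra_probing models a client that tries to learn more about the
    weights than it needs, which adds to the disturbance it leaves behind.
    """
    output = [
        max(0.0, sum(w * x for w, x in zip(row, activations)))
        for row in weights
    ]
    disturbance = BASELINE_DISTURBANCE + extra_probing
    return output, disturbance


def server_accepts(disturbance, threshold=THRESHOLD):
    """Server-side check on the residual: small errors pass, large ones fail."""
    return disturbance <= threshold


def run_protocol(layers, client_input, extra_probing=0.0):
    """Run layer by layer; return None if the server detects excess leakage."""
    activations = client_input
    for weights in layers:
        activations, disturbance = client_layer_step(
            weights, activations, extra_probing
        )
        if not server_accepts(disturbance):
            return None  # server aborts the session
    return activations


toy_layers = [[[1.0, -1.0], [0.5, 0.5]], [[1.0, 2.0]]]
honest = run_protocol(toy_layers, [2.0, 1.0])
greedy = run_protocol(toy_layers, [2.0, 1.0], extra_probing=0.5)
print(honest, greedy)  # [4.0] None
```

In this toy, the server only ever sees the disturbance value, never the client's input, which loosely mirrors the claim that the residual light does not reveal the client data.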

A practical protocol

Modern telecommunications equipment typically relies on optical fibers to transfer information because of the need to support massive bandwidth over long distances. Because this equipment already incorporates optical lasers, the researchers can encode data into light for their security protocol without any special hardware.

When they tested their approach, the researchers found that it could guarantee security for server and client while enabling the deep neural network to achieve 96% accuracy.

The tiny bit of information about the model that leaks when the client performs operations amounts to less than 10% of what an adversary would need to recover any hidden information. Working in the other direction, a malicious server could only obtain about 1% of the information it would need to steal the client’s data.

“You can be guaranteed that it is secure in both ways—from the client to the server and from the server to the client,” Sulimany says.

“A few years ago, when we developed our demonstration of distributed machine learning inference between MIT’s main campus and MIT Lincoln Laboratory, it dawned on me that we could do something entirely new to provide physical-layer security, building on years of quantum cryptography work that had also been shown on that testbed,” says Englund.

“However, there were many deep theoretical challenges that had to be overcome to see if this prospect of privacy-guaranteed distributed machine learning could be realized. This didn’t become possible until Kfir joined our team, as Kfir uniquely understood the experimental as well as theory components to develop the unified framework underpinning this work.”

In the future, the researchers want to study how this protocol could be applied to a technique called federated learning, where multiple parties use their data to train a central deep-learning model. It could also be used in quantum operations, rather than the classical operations they studied for this work, which could provide advantages in both accuracy and security.

“This work combines in a clever and intriguing way techniques drawing from fields that do not usually meet, in particular, deep learning and quantum key distribution. By using methods from the latter, it adds a security layer to the former, while also allowing for what appears to be a realistic implementation.

“This can be interesting for preserving privacy in distributed architectures. I am looking forward to seeing how the protocol behaves under experimental imperfections and its practical realization,” says Eleni Diamanti, a CNRS research director at Sorbonne University in Paris, who was not involved with this work.

More information: Kfir Sulimany et al, Quantum-secure multiparty deep learning, arXiv (2024). DOI: 10.48550/arxiv.2408.05629

Journal information: arXiv
