By ZACH WINN, MASSACHUSETTS INSTITUTE OF TECHNOLOGY, JUNE 30, 2024

The startup Augmental allows users to operate phones and other devices using their tongue, mouth, and head gestures.

Tomás Vega developed the MouthPad through his startup, Augmental, to help people with disabilities interact with technology using just their tongues and head movements. His journey from a technology-enthused child to an innovative CEO underscores his commitment to enhancing life for those with physical limitations.

Early Inspirations and Technology’s Role

When Tomás Vega SM ’19 was 5 years old, he began to stutter. The experience gave him an appreciation for the adversity that can come with a disability. It also showed him the power of technology.

“A keyboard and a mouse were outlets,” Vega says. “They allowed me to be fluent in the things I did. I was able to transcend my limitations in a way, so I became obsessed with human augmentation and with the concept of cyborgs. I also gained empathy. I think we all have empathy, but we apply it according to our own experiences.”

Augmenting Abilities Through Technology

Vega has been using technology to augment human capabilities ever since. He began programming when he was 12. In high school, he helped people manage disabilities including hand impairments and multiple sclerosis. In college, first at the University of California at Berkeley and then at MIT, Vega built technologies that helped people with disabilities live more independently.

Today Vega is the co-founder and CEO of Augmental, a startup deploying technology that lets people with movement impairments seamlessly interact with their personal computational devices.

Innovating With the MouthPad

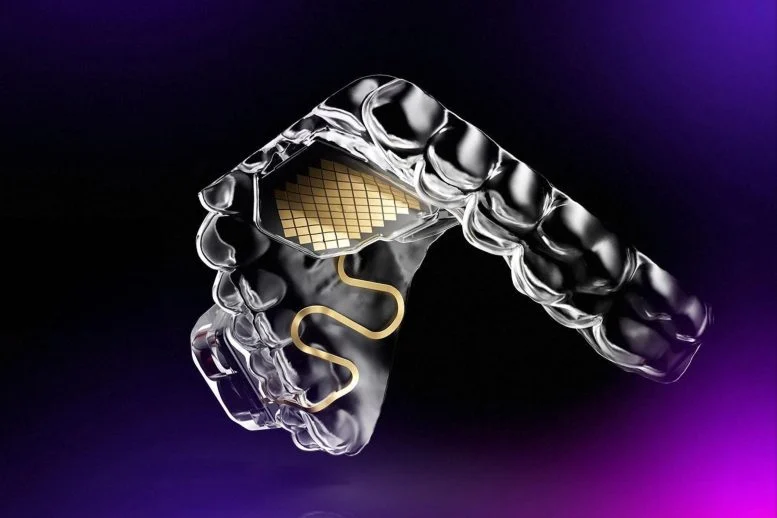

Augmental’s first product is the MouthPad, which allows users to control their computer, smartphone, or tablet through tongue and head movements. The MouthPad’s pressure-sensitive touch pad sits on the roof of the mouth, and, working with a pair of motion sensors, translates tongue and head gestures into cursor scrolling and clicks in real time via Bluetooth.

“We have a big chunk of the brain that is devoted to controlling the position of the tongue,” Vega explains. “The tongue comprises eight muscles, and most of the muscle fibers are slow-twitch, which means they don’t fatigue as quickly. So, I thought why don’t we leverage all of that?”

Impact of Augmental’s Technologies

People with spinal cord injuries are already using the MouthPad every day to interact with their favorite devices independently. One of Augmental’s users, who is living with quadriplegia and studying math and computer science in college, says the device has helped her write math formulas and study in the library, both use cases where speech-based assistive devices weren’t appropriate.

“She can now take notes in class, she can play games with her friends,” Vega says. “She is more independent. Her mom told us that getting the MouthPad was the most significant moment since her injury.”

That’s the ultimate goal of Augmental: to improve the accessibility of technologies that have become an integral part of our lives.

“We hope that a person with a severe hand impairment can be as competent using a phone or tablet as somebody using their hands,” Vega says.

Educational and Professional Journey

In 2012, as a first-year student at UC Berkeley, Vega met his eventual Augmental co-founder, Corten Singer. That year, he told Singer he was determined to join the Media Lab as a graduate student, something he achieved four years later when he joined the Media Lab’s Fluid Interfaces research group run by Pattie Maes, MIT’s Germeshausen Professor of Media Arts and Sciences.

“I only applied to one program for grad school, and that was the Media Lab,” Vega says. “I thought it was the only place where I could do what I wanted to do, which is augmenting human ability.”

Development of Assistive Technologies

At the Media Lab, Vega took classes in microfabrication, signal processing, and electronics. He also developed wearable devices to help people access information online, improve their sleep, and regulate their emotions.

“At the Media Lab, I was able to apply my engineering and neuroscience background to build stuff, which is what I love doing the most,” Vega says. “I describe the Media Lab as Disneyland for makers. I was able to just play, and to explore without fear.”

Vega had gravitated toward the idea of a brain-machine interface, but an internship at Neuralink made him seek out a different solution.

“A brain implant has the highest potential for helping people in the future, but I saw a number of limitations that pushed me from working on it right now,” Vega says. “One is the long timeline for development. I’ve made so many friends over the past years that needed a solution yesterday.”

At MIT, he decided to build a solution with all the potential of a brain implant but without the limitations.

The Birth of Augmental

In his last semester at MIT, Vega built what he describes as “a lollipop with a bunch of sensors” to test the mouth as a medium for computer interaction. It worked beautifully.

“At that point, I called Corten, my co-founder, and said, ‘I think this has the potential to change so many lives,’” Vega says. “It could also change the way humans interact with computers in the future.”

Vega drew on MIT resources including the Venture Mentoring Service and the MIT I-Corps program, and received crucial early funding from MIT’s E14 Fund. Augmental was officially born when Vega graduated from MIT at the end of 2019.

Augmental generates each MouthPad design using a 3D model based on a scan of the user’s mouth. The team then 3D prints the retainer using dental-grade materials and adds the electronic components.

With the MouthPad, users can scroll up, down, left, and right by sliding their tongue. They can also right click by doing a sipping gesture and left click by pressing on their palate. For people with less control of their tongue, bites, clenches, and other gestures can be used, and people with more neck control can use head-tracking to move the cursor on their screen.
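For readers who think in code, the gesture mapping described above can be sketched as a simple lookup table. This is only an illustration of the mapping as the article describes it, not Augmental’s software: the names `Gesture`, `Action`, `GESTURE_TO_ACTION`, and `translate` are hypothetical, and the real device’s sensing and Bluetooth handling are not shown.

```python
from enum import Enum
from typing import Optional, Tuple


class Gesture(Enum):
    """Hypothetical labels for the gestures named in the article."""
    TONGUE_SLIDE = "tongue_slide"
    PALATE_PRESS = "palate_press"
    SIP = "sip"


class Action(Enum):
    """Hypothetical labels for the resulting pointer actions."""
    SCROLL = "scroll"
    LEFT_CLICK = "left_click"
    RIGHT_CLICK = "right_click"


# Mapping taken from the article: sliding the tongue scrolls,
# pressing the palate left-clicks, a sipping gesture right-clicks.
GESTURE_TO_ACTION = {
    Gesture.TONGUE_SLIDE: Action.SCROLL,
    Gesture.PALATE_PRESS: Action.LEFT_CLICK,
    Gesture.SIP: Action.RIGHT_CLICK,
}

SCROLL_DIRECTIONS = {"up", "down", "left", "right"}


def translate(gesture: Gesture, direction: Optional[str] = None) -> Tuple[str, Optional[str]]:
    """Return (action, direction) for a gesture; direction applies only to scrolling."""
    action = GESTURE_TO_ACTION[gesture]
    if action is Action.SCROLL:
        if direction not in SCROLL_DIRECTIONS:
            raise ValueError("a scroll needs one of: up, down, left, right")
        return (action.value, direction)
    return (action.value, None)


if __name__ == "__main__":
    print(translate(Gesture.TONGUE_SLIDE, "up"))
    print(translate(Gesture.SIP))
```

A table like this also suggests how alternative inputs such as bites or clenches could be swapped in for users with less tongue control, by remapping which gesture triggers which action.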

“Our hope is to create an interface that is multimodal, so you can choose what works for you,” Vega says. “We want to be accommodating to every condition.”

Scaling the MouthPad

Many of Augmental’s current users have spinal cord injuries, with some users unable to move their hands and others unable to move their heads. Gamers and programmers have also used the device. The company’s most frequent users interact with the MouthPad every day for up to nine hours.

“It’s amazing because it means that it has really seamlessly integrated into their lives, and they are finding lots of value in our solution,” Vega says.

Augmental is hoping to gain U.S. Food and Drug Administration clearance over the next year to help users do things like control wheelchairs and robotic arms. FDA clearance will also unlock insurance reimbursements for users, which will make the product more accessible.

Long-Term Vision and the Role of AI

Augmental is already working on the next version of its system, which will respond to whispers and even more subtle movements of internal speech organs.

“That’s crucial to our early customer segment because a lot of them have lost or have impaired lung function,” Vega says.

Vega is also encouraged by progress in AI agents and the hardware that goes with them. No matter how the digital world evolves, Vega believes Augmental can be a tool that benefits everyone.

“What we hope to provide one day is an always-available, robust, and private interface to intelligence,” Vega says. “We think that this is the most expressive, wearable, hands-free input system that humans have created.”
