DECEMBER 19, 2022 by Ingrid Fadelli, Tech Xplore

Simultaneous localization and mapping (SLAM) is a promising technology that can be used to improve the navigation of autonomous systems, helping them to map their surrounding environment and track their own position within it. So far, it has primarily been applied to terrestrial vehicles and mobile robots, yet it could also potentially be expanded to spacecraft.

Researchers at Georgia Institute of Technology (Georgia Tech) and the NASA Goddard Space Flight Center recently created AstroSLAM, a SLAM-based algorithm that could allow spacecraft to navigate more autonomously. The new solution, introduced in a paper pre-published on arXiv, could be particularly useful in instances where space systems are navigating around a small celestial body, such as an asteroid.

“Our recent work is part of a NASA-funded ESI (Early-Stage Innovations) program whose objective was to make future spacecraft destined for deep-space missions (e.g., visiting and surveying asteroids) more autonomous,” Panagiotis Tsiotras, one of the researchers who carried out the study, told Tech Xplore.

“This problem is of great interest since, owing to the large distances from Earth, it is difficult to execute the required maneuvers around the asteroid in a real-time manner. Instead, the current process requires a large team of human operators on the ground to downlink the images captured from the spacecraft and to analyze them offline to create digital terrain maps, which amounts to carefully choreographing the spacecraft maneuvers.”

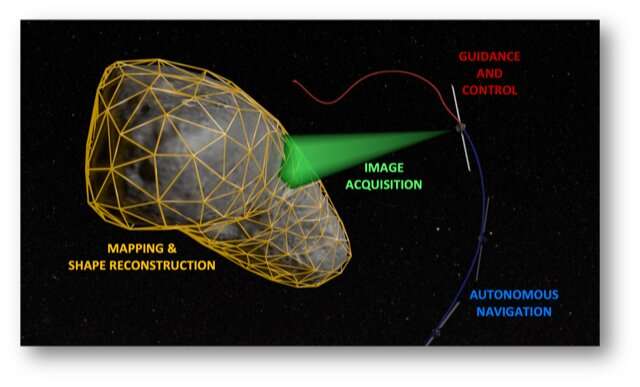

Ensuring that spacecraft move in desired ways around asteroids is a laborious, tedious and time-consuming task for human agents on Earth. A model that can autonomously reconstruct the shape of nearby asteroids and navigate the spacecraft with minimal intervention from Earth would thus be incredibly valuable, as it could facilitate and potentially speed up deep-space missions.

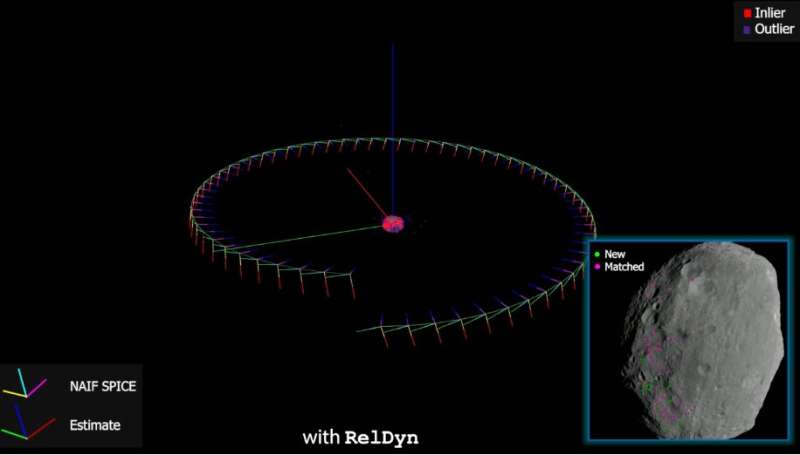

AstroSLAM, the solution developed by Tsiotras and his colleagues, can autonomously generate the location and orientation of spacecraft relative to that of nearby asteroids or other small celestial bodies. It achieves this by analyzing a sequence of images taken from a camera onboard the spacecraft as it is orbiting the celestial body of interest.
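
Camera-based SLAM pipelines typically work by comparing where a mapped surface feature should appear in an image, given a candidate camera pose, with where it was actually detected. The following is a minimal sketch of that comparison, assuming an ideal pinhole camera whose axes are aligned with the asteroid-fixed frame. The function names, parameters and numbers are illustrative assumptions and are not taken from the AstroSLAM paper.

```python
import math


def to_camera_frame(landmark, cam_position):
    """Express a landmark in the camera frame.

    Assumption for brevity: the camera axes are aligned with the
    asteroid-fixed frame, so only a translation is needed (no rotation).
    """
    return tuple(l - c for l, c in zip(landmark, cam_position))


def project(point_cam, focal_px, cx, cy):
    """Pinhole projection of a camera-frame point (z forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def reprojection_error(landmark, cam_position, observed_px, focal_px, cx, cy):
    """Pixel distance between the predicted and the observed feature location."""
    u, v = project(to_camera_frame(landmark, cam_position), focal_px, cx, cy)
    return math.hypot(u - observed_px[0], v - observed_px[1])


# Hypothetical values: one surface feature, one detection, two candidate positions.
landmark = (1.0, 0.0, 0.0)
observed = (612.0, 512.0)
good_guess = (0.0, 0.0, -10.0)
poor_guess = (0.5, 0.0, -10.0)

err_good = reprojection_error(landmark, good_guess, observed, 1000.0, 512.0, 512.0)
err_poor = reprojection_error(landmark, poor_guess, observed, 1000.0, 512.0, 512.0)
print(err_good, err_poor)
```

In a sketch like this, the candidate position with the smaller error is the one more consistent with the image. A full system would minimize such errors over many features and frames, and over orientation as well as position.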

“AstroSLAM, as its name suggests, is based on SLAM, a methodology that has so far been used with great success in terrestrial mobile robots, but which we have now extended to the space environment,” Tsiotras explained. “Our model can also generate a 3D shape representation of small celestial bodies and estimate their size and gravitational parameters. The algorithm is the culmination of more than five years of work in vision-based relative navigation for spacecraft in my group, the Dynamics and Control Systems Laboratory at Georgia Tech.”

AstroSLAM can estimate the relative position and orientation of spacecraft in full autonomy. This information can then be used to plan and execute various maneuvers in orbit, including landing on a nearby celestial body. The model can also generate images of the 3D shape of the nearby celestial body, estimating its size and gravitational parameters.

“One of the novelties of AstroSLAM is that it takes into account the motion constraints stemming from the orbital dynamics, thus providing a much more accurate navigation solution,” Tsiotras said.
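
One way to picture a motion constraint of this kind is as a penalty on pose estimates that disagree with where gravity would have carried the spacecraft. The sketch below is a simplified illustration under stated assumptions: it treats the asteroid as a point mass, ignores all perturbing forces, and uses a basic velocity-Verlet integrator. The function names and the gravitational parameter are hypothetical and do not come from the AstroSLAM paper.

```python
import math


def two_body_accel(r, mu):
    """Point-mass gravitational acceleration at position r (m), with mu in m^3/s^2."""
    d = math.sqrt(sum(c * c for c in r))
    return tuple(-mu * c / d**3 for c in r)


def propagate(r, v, mu, dt, steps):
    """Advance position and velocity with velocity-Verlet steps of size dt."""
    a = two_body_accel(r, mu)
    for _ in range(steps):
        v_half = tuple(vi + 0.5 * dt * ai for vi, ai in zip(v, a))
        r = tuple(ri + dt * vh for ri, vh in zip(r, v_half))
        a = two_body_accel(r, mu)
        v = tuple(vh + 0.5 * dt * ai for vh, ai in zip(v_half, a))
    return r, v


def motion_residual(r_prev, v_prev, r_est, mu, dt, steps):
    """Distance between the dynamics-predicted position and a vision-based estimate."""
    r_pred, _ = propagate(r_prev, v_prev, mu, dt, steps)
    return math.dist(r_pred, r_est)


# Hypothetical scenario: a circular orbit 1 km from a small body.
mu = 5.0                                # assumed gravitational parameter, m^3/s^2
r0 = (1000.0, 0.0, 0.0)
v0 = (0.0, math.sqrt(mu / 1000.0), 0.0)  # circular-orbit speed at this radius

r_pred, _ = propagate(r0, v0, mu, dt=10.0, steps=1000)
# A vision-based estimate that is 5 m off the orbital plane.
r_vision = (r_pred[0], r_pred[1], 5.0)
print(motion_residual(r0, v0, r_vision, mu, dt=10.0, steps=1000))
```

An estimator could weigh a residual like this alongside the image-based errors, so that a pose suggested by noisy features but inconsistent with the orbit is pulled back toward a physically plausible trajectory.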

“AstroSLAM reduces a spacecraft’s reliance on the human ground crew to run complex computations, thus increasing its autonomy and relative navigation capabilities. Even if we continue to rely on existing well-tested methodologies for the foreseeable future, the proposed approach can also serve as a ‘back-up’ solution in case the primary approach fails, as it relies on just a single camera.”

The researchers evaluated their technology in a series of tests, using real data captured by NASA during legacy space missions and high-fidelity artificial data generated using a spacecraft simulator at Georgia Tech. Their findings were very promising, suggesting that AstroSLAM could eventually enable the autonomous operation of spacecraft in various scenarios.

“We are currently working on improving the image processing step of AstroSLAM (e.g., salient feature detection and tracking), by leveraging a state-of-the-art neural-network architecture trained on a large database of real images of asteroids from prior NASA missions to detect more reliable, salient surface features,” Tsiotras added. “Once integrated with AstroSLAM, this work is expected to increase the reliability and robustness against incorrect measurements (outliers) and difficult illumination conditions.”

Tsiotras and his colleagues are now also working to allow the model to merge images from visible light and infrared light, to attain even better performance. Finally, they wish to extend their approach to operational scenarios in which the images would be captured by multiple spacecraft in orbit concurrently.

“Small celestial bodies, such as asteroids, comets, and planetary moons, are fascinating and scientifically-valuable targets for exploration,” said Kenneth Getzandanner, co-author of the paper and flight dynamics lead for Space Science Mission Operations at the NASA Goddard Space Flight Center.

“Missions to these objects, however, present unique challenges to navigation and operations given the objects’ small size and the magnitude of perturbing forces relative to gravity. Recent small body missions, including the Origins, Spectral Interpretation, Resource Identification, Security-Regolith Explorer (OSIRIS-REx) at the near-Earth asteroid 101955 Bennu, exemplify these challenges and require extensive characterization campaigns and significant ground-in-the-loop interaction. Technologies such as AstroSLAM are useful for simplifying operations, reducing reliance on ground assets and personnel for near real-time operations, and enabling more ambitious mission concepts and near-surface sorties.”

More information: Mehregan Dor et al, AstroSLAM: Autonomous Monocular Navigation in the Vicinity of a Celestial Small Body—Theory and Experiments, arXiv (2022). DOI: 10.48550/arxiv.2212.00350

Journal information: arXiv
